Nvidia’s latest research on generative AI has one more dimension than most: it generates 3D models from a one-sentence description.

We live in a 3D world, and although most applications today are in 2D, there has always been a high demand for 3D digital content, including applications such as games, entertainment, construction and robotics simulations.

However, creating professional 3D content requires strong artistic and aesthetic sense as well as extensive 3D modeling expertise. Developing these skills takes a lot of time and effort, and so does doing the work by hand.

Demand is high and the work is labor-intensive, so can it be handed over to AI? On Friday, a paper Nvidia submitted to the preprint platform arXiv attracted attention.

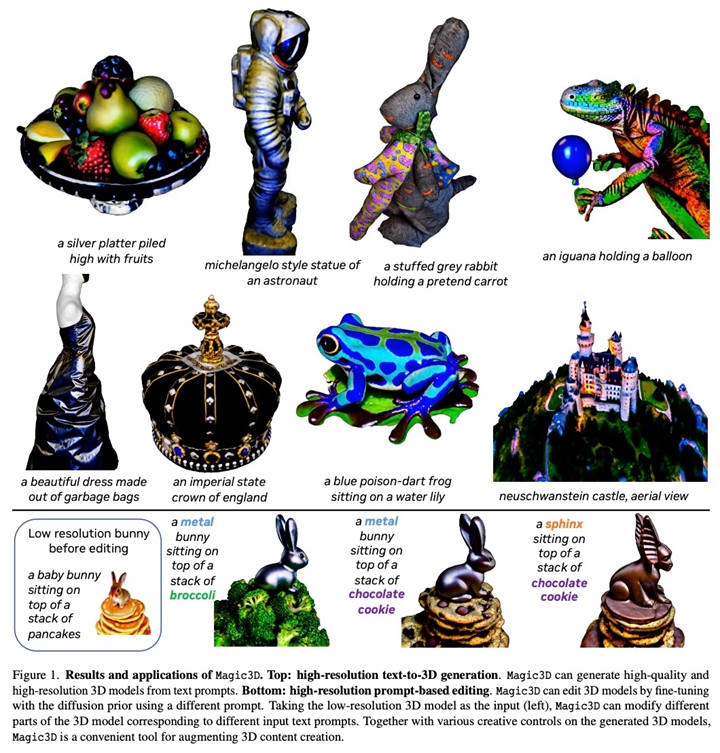

As with the now-popular NovelAI, users only need to enter a text prompt such as “a blue poison dart frog sitting on a water lily”, and the AI generates a 3D model with complete texture and shape.

The model, called Magic3D, can also perform prompt-based 3D mesh editing: given a low-resolution 3D model and its base prompt, the text can be changed to modify the resulting model. In addition, the authors demonstrate that it can preserve style and apply 2D image styles to 3D models.

Stable Diffusion was first released only in August 2022, and the field has come this far in just a few months, which makes people marvel at the speed of technological development.

Nvidia said that, with only slight modification, the resulting model can be used as material for games or CGI art scenes.

Text-to-3D generation is not uncharted territory. On September 29, Google released DreamFusion, a text-to-3D generation model, and Nvidia’s Magic3D builds directly on that method.

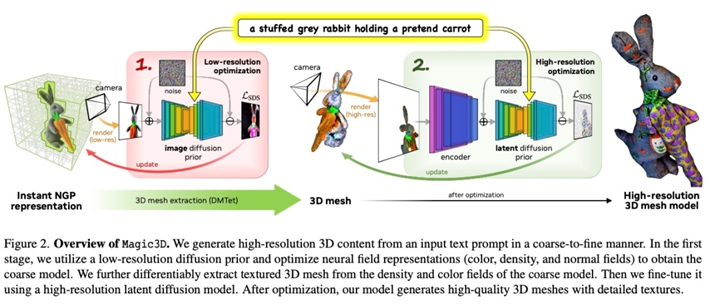

Similar to DreamFusion’s process of generating 2D images from text and optimizing them into a volumetric NeRF (Neural Radiance Field), Magic3D uses a two-stage generation method: it first generates a rough model at low resolution and then optimizes it at a higher resolution.

Nvidia’s method first uses a low-resolution diffusion prior to obtain a coarse model, accelerating this step with a sparse 3D hash grid structure. Starting from that coarse representation, it then further optimizes a textured 3D mesh model, using an efficient differentiable renderer that interacts with a high-resolution latent diffusion model.
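The coarse-to-fine idea can be illustrated with a deliberately tiny analogue. This is a minimal sketch and not Magic3D’s actual pipeline: a hypothetical 1D list of numbers stands in for the 3D scene, and plain gradient descent on squared error stands in for the diffusion prior. All names (`downsample`, `upsample`, `fit`, `target`) are made up for illustration.

```python
def downsample(values, factor):
    """Average each consecutive group of `factor` values (a low-resolution view)."""
    return [sum(values[i:i + factor]) / factor
            for i in range(0, len(values), factor)]


def upsample(values, factor):
    """Repeat each coarse value `factor` times to initialise the fine stage."""
    return [v for v in values for _ in range(factor)]


def fit(params, target, steps=50, lr=0.1):
    """Gradient descent on the summed squared error between params and target."""
    params = list(params)
    for _ in range(steps):
        # d/dp (p - t)^2 = 2 * (p - t)
        params = [p - lr * 2.0 * (p - t) for p, t in zip(params, target)]
    return params


def mse(a, b):
    """Mean squared error between two equally long lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# Hypothetical 1D "scene" standing in for the full-resolution target.
target = [0.0, 0.1, 0.2, 0.1, 0.9, 1.0, 0.8, 0.9]

# Stage 1: optimise a coarse representation against low-resolution supervision.
coarse = fit([0.0, 0.0], downsample(target, 4))

# Stage 2: initialise the fine representation from the coarse result,
# then refine it against full-resolution supervision.
fine_init = upsample(coarse, 4)
fine = fit(fine_init, target)

print(f"error after coarse stage: {mse(fine_init, target):.4f}")
print(f"error after fine stage:   {mse(fine, target):.4f}")
```

The coarse stage only has to get the overall shape right cheaply; the fine stage starts from that result instead of from scratch and spends its effort on detail.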

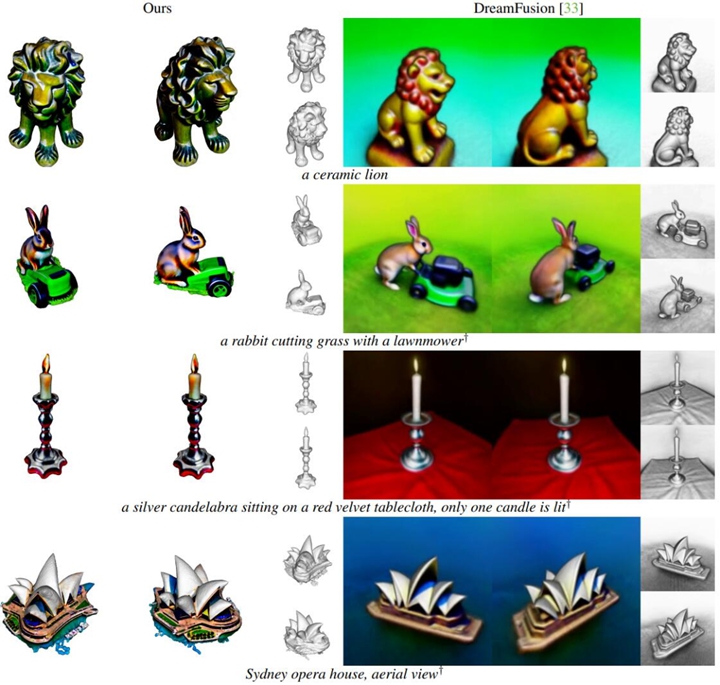

Magic3D can create a high-quality 3D mesh model in 40 minutes, about twice as fast as DreamFusion (which takes 1.5 hours on average), and at a higher resolution. In a user study, 61.7% of raters preferred Nvidia’s new approach over DreamFusion.

Together with its image-conditioned generation capabilities, the new technology opens up new avenues for a variety of creative applications.

Technical details

Magic3D synthesizes highly detailed, high-quality 3D models from text prompts in a short computation time. It does so by improving on several major design choices in DreamFusion.

Specifically, Magic3D is a coarse-to-fine optimization approach in which multiple diffusion priors at different resolutions are used to optimize the 3D representation, resulting in view-consistent geometry and high-resolution details. It synthesizes 3D content with 8x higher-resolution supervision than DreamFusion while also being 2x faster.

The entire Magic3D workflow is divided into two stages. In the first stage, the method optimizes a coarse neural field representation, similar to DreamFusion, but uses a hash grid-based scene representation that is efficient in both memory and computation.
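To give a feel for why a hash grid saves memory, here is a minimal sketch of a hashed voxel lookup. It is an illustration under assumptions rather than Nvidia’s implementation: the hash constants follow the spatial hash published with Instant NGP, the `HashGrid` class and its parameters are hypothetical, and the lookup uses the nearest voxel only, with a single resolution level and no interpolation.

```python
# Constants from the spatial hash described in the Instant NGP paper.
PRIMES = (1, 2654435761, 805459861)


def spatial_hash(ix, iy, iz, table_size):
    """Map non-negative integer voxel coordinates to a slot in a fixed-size table."""
    h = (ix * PRIMES[0]) ^ (iy * PRIMES[1]) ^ (iz * PRIMES[2])
    return h % table_size


class HashGrid:
    """Hypothetical single-level hash grid storing one feature vector per slot."""

    def __init__(self, resolution, table_size, feature_dim=2):
        self.resolution = resolution
        self.table_size = table_size
        self.table = [[0.0] * feature_dim for _ in range(table_size)]

    def dense_cells(self):
        """Number of cells a dense grid of the same resolution would need."""
        return self.resolution ** 3

    def lookup(self, x, y, z):
        """Return the feature vector of the voxel containing a point in [0, 1)^3."""
        ix, iy, iz = (int(c * self.resolution) for c in (x, y, z))
        return self.table[spatial_hash(ix, iy, iz, self.table_size)]


grid = HashGrid(resolution=256, table_size=2 ** 14)
grid.lookup(0.5, 0.5, 0.5)[0] = 1.0  # write a feature for one voxel

print(grid.dense_cells())  # cells in an equivalent dense grid
print(grid.table_size)     # slots actually stored
```

Different voxels can collide in the same slot; the trade-off is that the table size, not the grid resolution, bounds memory use.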

In the second stage, the method switches to optimizing a mesh representation. This step is critical because it allows the method to exploit diffusion priors at resolutions up to 512 × 512. Since 3D meshes are suited to fast graphics rendering and high-resolution images can be rendered on the fly, the study uses an efficient rasterization-based differentiable renderer and camera close-ups to recover high-frequency details in geometry and texture.
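The 512 × 512 figure lines up with the “8x” resolution claim if one assumes DreamFusion is supervised at 64 × 64. That number comes from the DreamFusion paper, not from this article, so treat it as an assumption. A quick check:

```python
# Assumption: DreamFusion's supervision resolution, taken from its own paper.
DREAMFUSION_RES = 64
# Stated in the article: Magic3D's second stage works at up to 512 x 512.
MAGIC3D_RES = 512

per_side = MAGIC3D_RES // DREAMFUSION_RES
pixel_ratio = (MAGIC3D_RES ** 2) // (DREAMFUSION_RES ** 2)

print(per_side)     # 8
print(pixel_ratio)  # 64
```

So “8x” refers to the side length of the rendered image; in pixel count the difference is 64x.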

Based on the above two stages, the method can generate highly realistic 3D content, which can be easily imported and visualized in standard graphics software.

Furthermore, the study demonstrates the creative control of the 3D synthesis process with text prompts, as shown in Figure 1 below.

To compare the two in practice, Nvidia researchers evaluated content generated by Magic3D and DreamFusion on 397 text prompts. On average, the coarse stage took 15 minutes and the fine stage 25 minutes, with all running times measured on 8 Nvidia A100 GPUs.
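The timing figures quoted in this article fit together as follows. The snippet only redoes the arithmetic with the article’s own numbers; the variable names are illustrative.

```python
coarse_minutes = 15              # average coarse stage
fine_minutes = 25                # average fine stage
dreamfusion_minutes = 1.5 * 60   # DreamFusion average quoted above
num_gpus = 8                     # Nvidia A100 GPUs used for the Magic3D timings

magic3d_minutes = coarse_minutes + fine_minutes
speedup = dreamfusion_minutes / magic3d_minutes
gpu_minutes = magic3d_minutes * num_gpus

print(magic3d_minutes)  # 40
print(speedup)          # 2.25
print(gpu_minutes)      # 320
```

The two stages add up to the 40 minutes cited earlier, and 90 / 40 = 2.25, which is where the rounded “2x faster” comes from. Note that 40 minutes of wall-clock time on 8 GPUs amounts to 320 GPU-minutes per model.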

Although papers and demos are just the first step, Nvidia has already figured out the future application direction for Magic3D: providing tools for making massive 3D models for games and metaverse worlds, and making them accessible to everyone.

Of course, Nvidia’s own Omniverse may be the first to launch this feature.
