Artificial intelligence is clearly reshaping and expanding the boundaries of creativity, but it is also creating serious problems through the unauthorized use of artworks by AI providers to train their models. The resulting conflict is being fought on several fronts. There is the legislative front, where lawmakers must address the complex and controversial question of how to protect creative works. There is the legal front, where companies such as OpenAI, Meta, Google and Stability AI have been sued by artists who accuse them of using copyrighted material without consent. And there is a less visible technological front, where software tools aim to protect artworks from scraping, fighting technology with technology.

The case of Nightshade and Glaze: the algorithm “disruptors”

In the field of intellectual property protection, the announcement of Nightshade has raised high expectations among artists, who increasingly find themselves competing with artificial copies of their works made without any authorization. Conceived by Ben Zhao, a computer science professor at the University of Chicago, Nightshade promises a small revolution. Once it is made available later this year, it will allow artists to insert invisible changes into the pixels of their works before uploading them online. These changes, imperceptible to the human eye, would “fool” the algorithm and cause unpredictable malfunctions in AI models trained on the images. In parallel, Zhao’s team created Glaze, a further protection tool that allows artists to mask their style so that generative AI cannot imitate it. It works similarly to Nightshade, altering the pixels of images so that algorithms interpret them as something different from what they actually show.

How data poisoning technology works

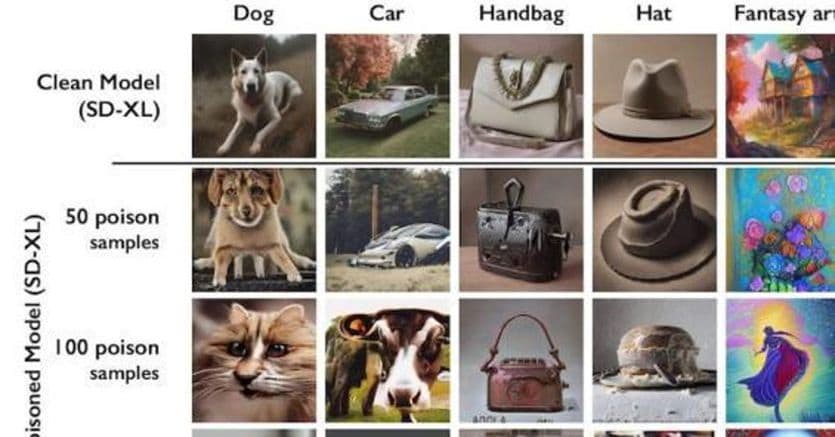

The goal of these tools is to disrupt the algorithm’s learning process in order to damage future iterations of generative image models, making some of their outputs chaotic and unusable. The technique is better known as “data poisoning”, and it aims to deceive an AI model into misinterpreting the category an image belongs to. As the image shows, dogs can be mistaken for cats, cars for cows, a hat for a cake and a bag for a toaster. Nightshade was successfully tested on Stable Diffusion models with a set of 50 “contaminated” images: once these were fed to the algorithm, it began to produce distorted results.

Despite Nightshade’s effectiveness in instilling a sort of “digital poison” into AI training systems, concerns remain that the technique could be used maliciously. Zhao, however, is reassuring: thousands of altered images would be needed to inflict real damage on the larger AI models. “Nightshade and Glaze could also be the catalyst for a broader transformation in intellectual property enforcement practices by generative artificial intelligences,” said Zhao. Junfeng Yang, a professor of computer science at Columbia University, said when asked by MIT that Nightshade could push artificial intelligence companies to better respect the rights of artists, for example by paying them royalties.

The moves of AI providers

In a scenario marked by continuous legal battles and growing concern among institutions and trade associations, some providers are trying to encourage conciliatory practices. This is the case of OpenAI, which in the updated version of DALL-E 3 has introduced new features that allow artists to remove their creations from the training process, along with filters that prevent the generation of images emulating the style of living artists. It is a step forward, but one that many analysts regard as merely a “stopgap” measure, given that it does not take into account the works already published and absorbed into the AI’s digital archives.