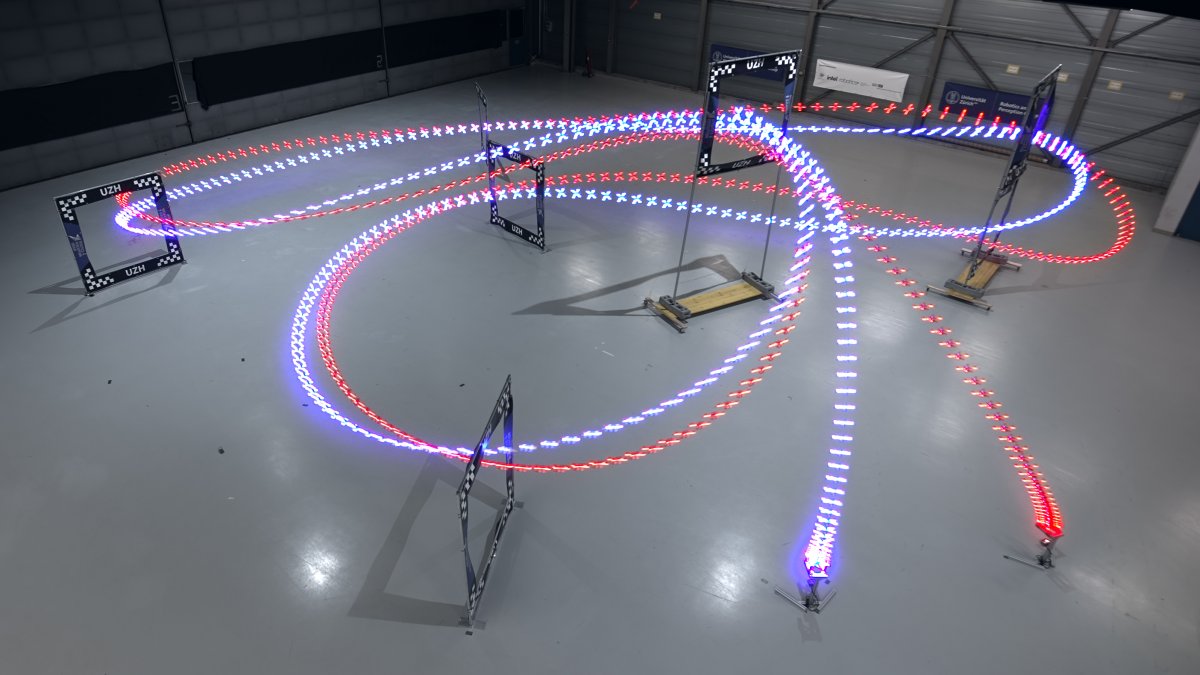

Researchers at the University of Zurich have developed software that steers a quadcopter around a drone racing course faster than professional human pilots. This is the first time an autonomous drone has beaten human pilots at world championship level. In an international competition four years ago, the AlphaPilot Challenge, the best autonomous systems still needed roughly twice as long as their human competitors. The team led by Elia Kaufmann from the University of Zurich has now presented the technical details of the system, called “Swift”, in the journal Nature. Swift competed against three human pilots on a 75-meter course with seven square gates. The human pilots, who had been allowed to train on the course for a week beforehand, won ten of the 25 races; Swift won the remaining 15.

In drone races, pilots steer their camera-equipped aircraft from a first-person perspective. They have to fly through the gates of a fixed course and can reach speeds of up to 100 km/h. Researchers in Davide Scaramuzza's group at the University of Zurich, where Kaufmann also worked, had previously flown drones through forests at high speed, but those aircraft were only half as fast.

Keeping the next gate in view

The Swift system consists of two components. The first estimates the drone's position and velocity, as well as the positions of the gates, from the onboard camera images and the readings of the acceleration sensors. The second derives the next control commands from the estimated position and velocity. This second component was trained beforehand in simulation using reinforcement learning: the software starts out with control sequences chosen purely at random and, through trial and error, eventually learns to fly the drone through the next gate as quickly as possible while keeping the gate after that in view as well as it can.
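The article does not say how Swift's training objective is defined. As a rough illustration of "as fast as possible through the next gate, while keeping the following gate in view", the Python sketch below computes a hypothetical per-step reward: one term for how much closer a step brings the drone to the next gate, and one for how well the camera points toward the gate after it. The function `step_reward`, its parameters, and the weights are assumptions for illustration, not Swift's actual reward; the trial-and-error training loop that would maximize it is not shown.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    """Component-wise difference a - b."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _norm(a: Vec3) -> float:
    return math.sqrt(_dot(a, a))


def step_reward(
    prev_pos: Vec3,
    pos: Vec3,
    camera_dir: Vec3,
    next_gate: Vec3,
    following_gate: Vec3,
    progress_weight: float = 1.0,  # hypothetical weighting
    view_weight: float = 0.1,      # hypothetical weighting
) -> float:
    """Hypothetical reward for one control step (NOT Swift's actual reward).

    progress: how much closer this step brought the drone to the next gate.
    view: cosine of the angle between the camera axis and the direction to
          the gate after next (1 = straight ahead, -1 = directly behind).
    """
    progress = _norm(_sub(next_gate, prev_pos)) - _norm(_sub(next_gate, pos))
    to_following = _sub(following_gate, pos)
    denom = _norm(camera_dir) * _norm(to_following)
    view = _dot(camera_dir, to_following) / denom if denom > 0 else 0.0
    return progress_weight * progress + view_weight * view


# Drone moves 1 m toward a gate 5 m ahead, camera pointing at the gate after it.
r = step_reward((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 0.0, 0.0),
                (5.0, 0.0, 0.0), (10.0, 0.0, 0.0))
print(r)  # 1.0 progress + 0.1 * 1.0 view
```

Rewarding progress at every step, rather than only when a gate is passed, is a common way to give a trial-and-error learner a signal long before it first flies a complete lap.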

Reinforcement learning has proven very successful, especially in computer games. The catch is that the agent being trained has to play through the task an enormous number of times, which in practice means doing so in simulation. And simulations differ from real environments: software trained to fly a drone in simulation often fails in reality because real sensor data are noisy and incomplete, and the simulation models the drone's flight behavior only in idealized form.

Kaufmann and his colleagues addressed this problem by recording the drone's flights on the race track with motion capture cameras before training and then evaluating these recordings together with the drone's own sensor data. From this combined evaluation, the researchers derived a model of the noise in the sensor data. They then fed position data that had been artificially made less precise in this way into the simulation used to train the control software. As elegant as the technique is, it is also its greatest limitation, because the model depends on the specific course and the specific drone. To adapt the system to other circumstances, the analysis and the training would have to be carried out under many different conditions.
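The article does not specify what form the noise model takes. The Python sketch below shows the basic idea under a strong simplifying assumption: along a single axis, the error of the onboard position estimate is treated as Gaussian, with its mean (bias) and standard deviation estimated from the differences to the motion-capture positions, which serve as ground truth. Perfect simulator positions are then degraded with samples from that distribution. The function names `fit_noise_model` and `degrade` and the sample values are hypothetical; the model the researchers actually used may be considerably more elaborate.

```python
import random
import statistics
from typing import List, Sequence, Tuple


def fit_noise_model(onboard: Sequence[float],
                    mocap: Sequence[float]) -> Tuple[float, float]:
    """Estimate (bias, sigma) of the onboard estimate's error along one axis,
    treating the motion-capture positions as ground truth."""
    if len(onboard) != len(mocap) or len(onboard) < 2:
        raise ValueError("need at least two paired samples")
    residuals = [o - m for o, m in zip(onboard, mocap)]
    return statistics.mean(residuals), statistics.stdev(residuals)


def degrade(sim_positions: Sequence[float], bias: float, sigma: float,
            rng: random.Random) -> List[float]:
    """Make perfect simulator positions 'less precise' by adding sampled error."""
    return [p + rng.gauss(bias, sigma) for p in sim_positions]


# Made-up illustration data: onboard estimates vs. motion-capture positions (m).
onboard = [1.1, 2.1, 3.1, 3.9]
mocap = [1.0, 2.0, 3.0, 4.0]
bias, sigma = fit_noise_model(onboard, mocap)  # bias ~ 0.05, sigma ~ 0.1

# Degraded positions that a training simulation would hand to the controller.
noisy = degrade([0.0, 0.5, 1.0], bias, sigma, random.Random(42))
```

A constant Gaussian is the simplest possible choice. Because the residuals are measured on one course with one drone, even this toy model inherits the limitation described above: its parameters only describe the conditions under which they were recorded.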

(wst)