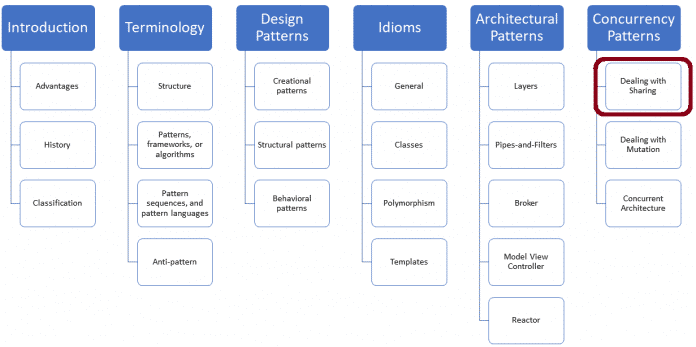

If data is not shared in concurrent applications, data races cannot arise. No sharing means the thread is working with local variables. This can be accomplished by copying the data, using thread-local memory, or transferring a thread’s result to its associated future over a protected data channel.

Rainer Grimm has been working as a software architect, team leader and training manager for many years. He likes to write articles on the programming languages C++, Python and Haskell, but also likes to speak frequently at specialist conferences. On his blog Modernes C++ he deals intensively with his passion for C++.

The patterns in this section are fairly obvious, but I’ll introduce them with a brief explanation for completeness. Let’s start with Copied Value.

Copied Value

When a thread gets its arguments by copy rather than by reference, access to the data does not need to be synchronized. There are no data races and no data lifetime issues.

Data races with references

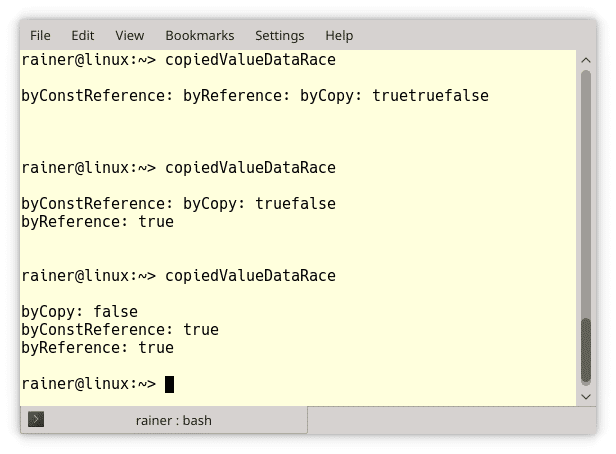

The following program creates three threads. The first thread gets its argument by copy, the second by reference, and the third by constant reference.

```cpp
// copiedValueDataRace.cpp

#include <chrono>
#include <functional>
#include <iostream>
#include <thread>

using namespace std::chrono_literals;

void byCopy(bool b){
    std::this_thread::sleep_for(1ms);                  // (1)
    std::cout << "byCopy: " << b << '\n';
}

void byReference(bool& b){
    std::this_thread::sleep_for(1ms);                  // (2)
    std::cout << "byReference: " << b << '\n';
}

void byConstReference(const bool& b){
    std::this_thread::sleep_for(1ms);                  // (3)
    std::cout << "byConstReference: " << b << '\n';
}

int main(){
    std::cout << std::boolalpha << '\n';

    bool shared{false};

    std::thread t1(byCopy, shared);
    std::thread t2(byReference, std::ref(shared));
    std::thread t3(byConstReference, std::cref(shared));

    shared = true;   // unsynchronized write while t2 and t3 read the same object

    t1.join();
    t2.join();
    t3.join();

    std::cout << '\n';
}
```

Each thread sleeps for one millisecond (1, 2, and 3) before printing the boolean. Only thread t1 has a local copy of the boolean and is therefore free of data races. The output of the program shows that the boolean values seen by threads t2 and t3 were modified without synchronization.

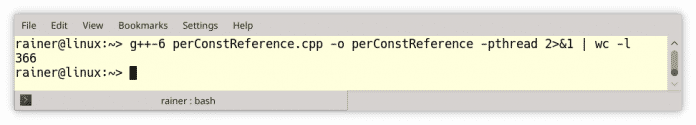

An obvious idea is to replace thread t3 in the previous example copiedValueDataRace.cpp with std::thread t3(byConstReference, shared). The program compiles and runs, but what looks like a reference is actually a copy. The reason is that std::thread applies the type trait std::decay to each of its arguments. std::decay performs the lvalue-to-rvalue, array-to-pointer, and function-to-pointer conversions on its type T. In particular, in this case it applies std::remove_reference to the type T, so the thread stores its own copy of the argument.

The following program perConstReference.cpp uses a non-copyable data type NonCopyableClass.

// perConstReference.cpp

#include

class NonCopyableClass{

public:

// the compiler generated default constructor

NonCopyableClass() = default;

// disallow copying

NonCopyableClass& operator =

(const NonCopyableClass&) = delete;

NonCopyableClass (const NonCopyableClass&) = delete;

};

void perConstReference(const NonCopyableClass& nonCopy){}

int main(){

NonCopyableClass nonCopy; // (1)

perConstReference(nonCopy); // (2)

std::thread t(perConstReference, nonCopy); // (3)

t.join();

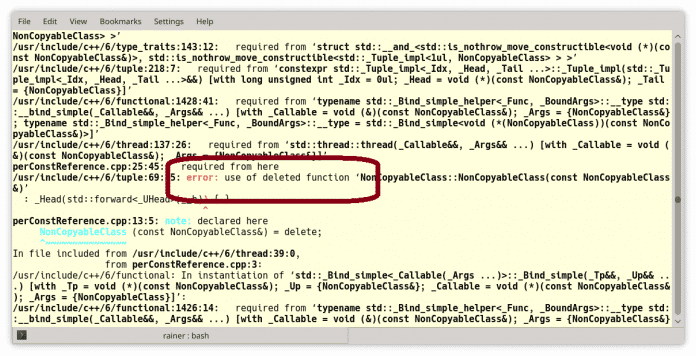

} The object nonCopy (1) cannot be copied. That's fine if I use the function perConstReference meet their Argument nonCopy (2) calls because the function takes its argument by constant reference. If I have the same function in the thread t (3), GCC produces a verbose compiler error with more than 300 lines:

The main part of the error message is in the middle of the screenshot, in a red, rounded rectangle: "error: use of deleted function". The copy constructor of the class NonCopyableClass is not available.

Anyone who borrows something must ensure that the underlying value is still available when it is used.

Reference lifetime issues

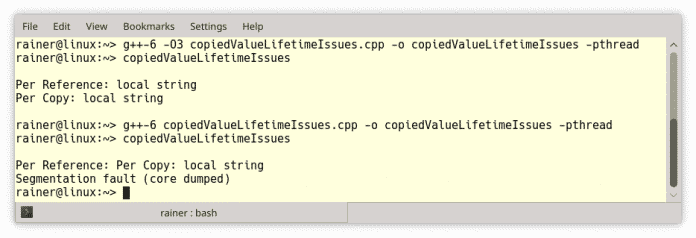

If a thread takes its argument by reference and you call detach on the thread, extreme caution is required. The small program copiedValueLifetimeIssues.cpp has undefined behavior.

```cpp
// copiedValueLifetimeIssues.cpp

#include <chrono>
#include <iostream>
#include <string>
#include <thread>

void executeTwoThreads(){                                // (1)
    const std::string localString("local string");       // (4)

    std::thread t1([localString]{
        std::cout << "Per Copy: " << localString << '\n';
    });

    std::thread t2([&localString]{
        std::cout << "Per Reference: " << localString << '\n';
    });

    t1.detach();                                         // (2)
    t2.detach();                                         // (3)
}

using namespace std::chrono_literals;

int main(){
    std::cout << '\n';

    executeTwoThreads();

    std::this_thread::sleep_for(1s);

    std::cout << '\n';
}
```

executeTwoThreads (1) starts two threads. Both threads are detached (2 and 3) and print the local variable localString from (4). The first thread captures the local variable by copy, the second by reference. For simplicity, I used a lambda expression in both cases to bind the argument. Because executeTwoThreads does not wait for the two threads to finish, thread t2 refers to the local string, whose lifetime is bound to that of the calling function. This leads to undefined behavior. Oddly, with GCC, the maximally optimized executable (-O3) seems to work, while the non-optimized executable crashes.

Thanks to thread-local storage, a thread can easily work on its own data.

Thread-local storage

Thread-local storage lets multiple threads use local storage through a global access point. With the specifier thread_local, a variable becomes a thread-local variable: each thread gets its own instance of it. That means you can use the thread-local variable without synchronization. Suppose you want to calculate the sum of all elements of a vector randValues. This is easy to implement with a range-based for loop:

```cpp
unsigned long long sum{};
for (auto n: randValues) sum += n;
```

On a PC with four cores, you can turn the sequential program into a concurrent one:

```cpp
// threadLocalSummation.cpp

#include <atomic>
#include <functional>
#include <iostream>
#include <random>
#include <thread>
#include <vector>

constexpr long long size = 10000000;

constexpr long long fir = 2500000;
constexpr long long sec = 5000000;
constexpr long long thi = 7500000;
constexpr long long fou = 10000000;

// every thread gets its own tmpSum, so it can be updated without synchronization
thread_local unsigned long long tmpSum = 0;

void sumUp(std::atomic<unsigned long long>& sum,
           const std::vector<int>& val,
           unsigned long long beg, unsigned long long end) {
    for (auto i = beg; i < end; ++i){
        tmpSum += val[i];
    }
    sum.fetch_add(tmpSum);                                   // (1)
}

int main(){
    std::cout << '\n';

    std::vector<int> randValues;
    randValues.reserve(size);

    std::mt19937 engine;
    std::uniform_int_distribution<> uniformDist(1, 10);
    for (long long i = 0; i < size; ++i)
        randValues.push_back(uniformDist(engine));

    std::atomic<unsigned long long> sum{0};

    std::thread t1(sumUp, std::ref(sum), std::ref(randValues), 0, fir);
    std::thread t2(sumUp, std::ref(sum), std::ref(randValues), fir, sec);
    std::thread t3(sumUp, std::ref(sum), std::ref(randValues), sec, thi);
    std::thread t4(sumUp, std::ref(sum), std::ref(randValues), thi, fou);

    t1.join();
    t2.join();
    t3.join();
    t4.join();

    std::cout << "Result: " << sum << '\n';

    std::cout << '\n';
}
```

You wrap the range-based for loop in a function and let each thread calculate a quarter of the sum in the thread_local variable tmpSum. The line sum.fetch_add(tmpSum) (1) finally adds all the partial sums to the atomic sum. You can read more about thread_local storage in the article "Thread-local data".

Promises and futures share a protected data channel.

Futures

C++11 offers futures and promises in three variants: std::async, std::packaged_task, and the pair std::promise and std::future. The future is a protected placeholder for the value that the promise sets. From a synchronization point of view, the crucial property of a promise/future pair is that a protected data channel connects the two. When implementing a future, a few decisions have to be made:

- A future can explicitly query its value with the get call.
- A future can start the computation lazily (only on request) or eagerly (immediately). Only the promise std::async supports lazy evaluation, by means of a launch policy.

```cpp
#include <future>

int main(){
    auto lazyOrEager = std::async([]{ return "LazyOrEager"; });
    auto lazy = std::async(std::launch::deferred, []{ return "Lazy"; });
    auto eager = std::async(std::launch::async, []{ return "Eager"; });

    lazyOrEager.get();
    lazy.get();
    eager.get();
}
```

If I don't specify a launch policy, the system decides whether to start the job immediately or on demand. With the launch policy std::launch::async, a new thread is created and the promise starts its work immediately. The launch policy std::launch::deferred behaves differently: only the call lazy.get() starts the promise, and the promise then runs on the thread that requests the result with get.

More about futures in C++ can be found in the article "Asynchronous Function Calls".

What's next?

Data races cannot occur if data is not written and read at the same time. In my next article, I will write about patterns that help protect data from modification.

(rme)