First, a premise. The performance of an artificial intelligence model depends not only on the number of its parameters but also on their quality and on how well they are tuned: well-adapted parameters can significantly improve the accuracy, efficiency and reliability of an AI system. In practice, parameters are the numerical values a model learns during training, and their count is often used as a rough measure of how many complex instructions the model can handle. Some LLMs already released have hundreds of billions of them: GPT-3 had 175 billion at the time of its release.
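To give a sense of scale, a parameter count can be turned into a rough memory footprint. The sketch below is only a back-of-the-envelope estimate: it assumes every parameter is stored at a fixed precision (16 bits by default) and counts the weights alone, ignoring activations and runtime overhead. The function name and the 4-bit example are illustrative assumptions, not figures from any vendor.

```python
def weight_memory_gb(num_params: float, bits_per_param: int = 16) -> float:
    """Approximate memory needed just to hold the weights, in gigabytes (10^9 bytes)."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


# GPT-3's 175 billion parameters stored at 16-bit precision
print(weight_memory_gb(175e9))                   # 350.0

# A hypothetical 7-billion-parameter model compressed to 4 bits per parameter
print(weight_memory_gb(7e9, bits_per_param=4))   # 3.5
```

Under these assumptions, the larger model needs hundreds of gigabytes for its weights alone, while a model with a few billion parameters can fit in the memory of a consumer device.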

How many billions of parameters do you need?

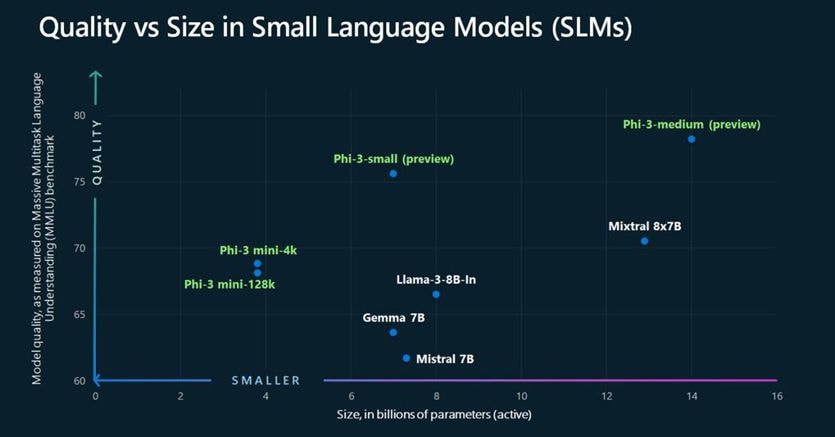

Starting from the need for a smaller, leaner language model that is less tied to complex cloud hardware, Microsoft’s researchers asked themselves how many parameters are really necessary to build a model capable of common-sense reasoning. The answer seems to have arrived with the new Phi-3 family of small language models. The first of the three is Phi-3-mini, which will shortly be followed by Phi-3 Small, with 7 billion parameters, and Phi-3 Medium, with 14 billion. Even though it was trained on a significantly smaller dataset than large language models, Phi-3-mini can match the capabilities of far larger LLMs.

Eric Boyd, vice president of Microsoft’s Azure AI platform, told The Verge that Phi-3-mini has the same capabilities as GPT-3.5 “just in a smaller package”, while offering a lower cost of ownership and the ability to run well even on less powerful devices such as smartphones and laptops. The engineering team of the giant led by Satya Nadella, which presented Phi-2 in December, claims to have adopted a new, more efficient and smarter approach to AI, achieving one of the best cost-performance ratios of any model on the market and outperforming models up to 10 times its size.

To achieve this, instead of stuffing the AI with vast amounts of data collected from the web, the developers trained Phi-3 in a way inspired by how children learn from bedtime stories, that is, through words and sentences with a simpler structure that cover wide-ranging topics. “There aren’t enough children’s books out there, so we took a list of over 3,000 words and asked an LLM to create ‘children’s books’ to train Phi,” says Boyd.

In addition to the other Responsible AI steps taken before a model is released, training on synthetic data has allowed Microsoft to add a further layer of safety and to reduce the most common problems with harmful language of the kind often displayed by models trained on data from the Internet.
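The word-list approach Boyd describes can be illustrated with a small sketch. The article does not detail Microsoft’s actual pipeline, so everything here is a hypothetical stand-in: the tiny vocabulary, the prompt wording and the function name are invented for illustration, and the step of sending the prompt to an LLM is left out.

```python
import random

# Hypothetical stand-in for the word list; the real list is not given in the article.
VOCABULARY = ["river", "lantern", "brave", "whisper", "garden", "puzzle", "kind", "storm"]


def build_story_prompt(vocabulary, words_per_story=3, seed=None):
    """Pick a few distinct words at random and wrap them in a story-writing instruction."""
    rng = random.Random(seed)  # a seed makes the selection reproducible
    chosen = rng.sample(vocabulary, words_per_story)
    prompt = (
        "Write a short bedtime story for a young child using simple sentences. "
        "The story must include these words: " + ", ".join(chosen) + "."
    )
    return chosen, prompt


chosen, prompt = build_story_prompt(VOCABULARY, seed=0)
print(prompt)  # this text would then be sent to an LLM to generate one synthetic story
```

Repeating the call with different random selections would yield many varied prompts from a single short word list, which is the idea behind generating a large synthetic corpus from limited source material.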

What Phi-3-mini promises

The goal of Microsoft’s new SLM is to provide answers based on general knowledge, with a marked improvement in problem-solving ability and a higher level of reasoning. The advantage of Phi-3-mini is that it is compact enough to run locally on a smartphone, with no need for an internet connection or cloud access, yet still manages to compete with larger models. This broadens access to AI in places that lack the infrastructure needed to use LLMs, and it is a concrete demonstration that even the smallest language models can find their place, especially in applications with limited resources. Thanks to their lower computing requirements, such models are also cheaper to run, which matters for companies that cannot afford a cloud-hosted LLM. Microsoft has made Phi-3 available on Azure, Hugging Face and Ollama; through the latter two it can also be run locally.
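As an illustration of what running the model locally through Ollama can look like, here is a minimal sketch that queries a local Ollama server over its HTTP API. It rests on several assumptions not stated in the article: that Ollama is installed and listening on its default address `http://localhost:11434`, and that the model has already been downloaded under the name `phi3`. The helper names `build_request` and `ask` are hypothetical.

```python
import json
import urllib.request


def build_request(prompt, model="phi3", host="http://localhost:11434"):
    """Prepare a POST request for Ollama's /api/generate endpoint."""
    # "stream": False asks the server for one complete JSON reply instead of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{host}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )


def ask(prompt):
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt)) as resp:
        return json.loads(resp.read())["response"]


# Usage, with the Ollama server running and the model already pulled:
#   print(ask("Explain in one sentence what a small language model is."))
```

Because the request goes to `localhost`, the prompt and the answer never leave the device once the model has been downloaded.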