Key News (0421~0427)

HuggingFace ChatGPT HuggingChat

HuggingFace open-sources chatbot HuggingChat, billed as comparable to ChatGPT

HuggingFace, a well-known AI startup and open-source community, has just open-sourced a chatbot, HuggingChat. Ordinary users can use it to chat, write programs, draft emails, and even write rap lyrics. Developers can use the open-source code to build more applications, or connect third-party apps through the HuggingFace API.

The chatbot is built on Open Assistant, an open-source assistant project initiated by LAION, a German non-profit organization known for creating the training data for the text-to-image AI model Stable Diffusion. Open Assistant aims to provide conversational AI that can run on consumer hardware and personalize its answers to individual needs. Currently, the Open Assistant model is based on Meta's large language model LLaMA, but the long-term plan is to use a variety of large language models to provide more powerful dialogue capabilities.

CancerGPT cancer Synergy

American universities team up to create CancerGPT, which can predict cancer drug synergy

The University of Texas, the University of Massachusetts Amherst, and the University of Texas Health Science Center have jointly built CancerGPT, a large language model with 124 million parameters that can predict the synergy of a pair of drugs in specific human tissues. Its few-shot prediction performance is even comparable to that of GPT-3, which has 175 billion parameters.

Years of research have shown that combining multiple drugs can be more effective than a single drug, especially for diseases such as cancer and nervous system disorders, but finding the right combination remains a challenge. Since current drug research focuses on predicting drug synergy, research teams from several US universities decided to use a large language model, leveraging the scientific knowledge it already contains and then applying few-shot training to predict drug synergy. In testing, the team found that CancerGPT achieves high accuracy even in zero-shot settings, laying the groundwork for general-purpose biomedical AI.

Google Bard debug

Google Bard can also help with coding and debugging

Google continues to enhance its chatbot Bard and recently announced that Bard can now help developers write and debug code. In March, Google opened a preview of Bard to a limited number of users in the United States and the United Kingdom, who could apply to try it. In addition to drafting articles and invitations, organizing meeting to-do items, and answering user questions, Bard now adds development-assistance features such as generating code, debugging code, and explaining code.

According to Google, Bard supports more than 20 programming languages, including C++, Go, Java, JavaScript, Python, and TypeScript. Developers can export Python code directly to Google Colab, Google's online Python notebook environment, without copying and pasting. Bard can also help write functions for Google Sheets spreadsheets, and when debugging, it can even correct code it generated itself.

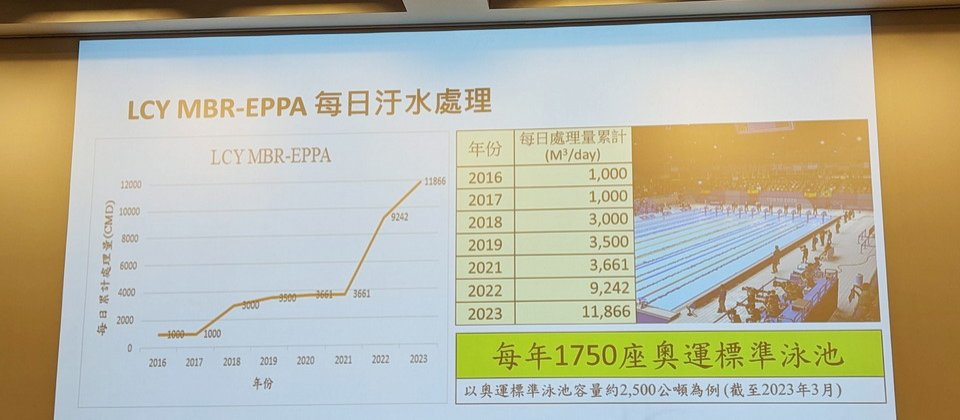

Li Changrong chemical industry sewage treatment

Taiwan's long-established chemical maker uses AI to triple its daily wastewater treatment

Li Changrong Chemical (LCY Chemical), founded nearly 60 years ago, recently revealed how it uses AI to improve the efficiency of water recycling in its production process, saving 4.37 million metric tons of factory water per year, equivalent to 1,750 Olympic-size swimming pools. The company mainly produces polypropylene, methanol, solvents, and thermoplastic rubber, which are widely used in medical, industrial, and household products. Many stages of its production process consume large amounts of water, so to save water it adopted an MBR (membrane bioreactor) wastewater treatment system early on to recycle treated water back into manufacturing.

However, treating process wastewater relies on a population of active bacteria growing in the biological treatment tank to break down pollutants in the sewage. To make the bacteria easier to cultivate, Li Changrong Chemical's IT team built an AI-based bacteria acclimation control system. It monitors the bacteria's activity and, based on environmental data from the aeration tank such as dissolved oxygen levels, decides when to aerate, raising the oxygen content in the water to keep the bacteria at peak activity and improve treatment quality. After introducing the system, the team found that process wastewater treatment efficiency rose sharply, from an average of 3,661 cubic meters per day in 2021 to 11,866 cubic meters per day in 2023, saving 4.37 million metric tons of factory water annually so far.

Information Security Generative AI intelligence analysis

Google also uses generative AI to enhance its own information security solutions

Google has updated its security solutions, integrating the large language model Sec-PaLM into three security offerings, Security AI Workbench, VirusTotal Code Insight, and Mandiant Breach Analytics for Chronicle, to add new features such as threat intelligence analysis.

For example, the Security AI Workbench platform uses Sec-PaLM to provide information on the threat landscape along with Mandiant intelligence on vulnerabilities, malware, threat indicators, and threat actor profiles. Users can also apply for a preview of VirusTotal Code Insight to use its Sec-PaLM-powered analysis and explanation of malicious script behavior. Mandiant Breach Analytics for Chronicle also integrates Sec-PaLM, allowing users to search security events in natural language without learning a new query syntax, further analyze the search results, or quickly create scanning tasks.

In addition, Google Security Command Center uses Sec-PaLM to turn complex attack graphs into human-readable explanations of attack exposure, describing potentially affected assets and recommended mitigations, and to generate summaries of security, compliance, and privacy risks.

Moderna quantum computer new drug discovery

Pharmaceutical company Moderna to use generative AI and quantum computing to explore new drugs

US pharmaceutical company Moderna recently announced that it will use IBM's generative AI and quantum computing technologies to advance mRNA research and drug development. Moderna will use IBM's foundation model, MoLFormer, to predict molecular properties and help its research team understand the characteristics of potential mRNA medicines.

MoLFormer is a large-scale chemical language model trained on small-molecule data. The team hopes to use it to optimize the lipid nanoparticles that protect mRNA, and to use generative AI to refine formulations and design the safest and most effective mRNA medicines. Moderna will also join the IBM Quantum Accelerator program and the IBM Quantum Network, using IBM's quantum computing systems for biotechnology research.

General AI DeepMind Google

Google consolidates its AI research units to focus on general AI

Google CEO Sundar Pichai announced that Alphabet subsidiary DeepMind and the Brain team from Google Research will merge to form a new unit, Google DeepMind, to accelerate AI development. The new unit will be led by DeepMind CEO Demis Hassabis, who will drive the development of responsible general-purpose AI systems, while Google Brain leader Jeff Dean will become Chief Scientist of Google Research and Google DeepMind, responsible for leading key AI projects such as the development of multimodal AI models.

Google DeepMind will also bring together the AI research achievements of Google Brain and DeepMind, including AlphaGo, the Transformer, the text representation model word2vec, the deep generative audio waveform model WaveNet, the protein structure prediction model AlphaFold, sequence-to-sequence models, distillation, deep reinforcement learning, and distributed systems and software frameworks such as TensorFlow and JAX for expressing, training, and deploying large-scale ML models.

Stability AI Generative AI large language model

Stability AI open-sources StableLM, a multi-billion-parameter LLM that is small but high-performing

AI startup Stability AI has open-sourced StableLM, a large language model (LLM) that can generate text and code, released in 3-billion and 7-billion-parameter versions, with 15-billion and 65-billion-parameter versions to follow. Stability AI said StableLM can serve as a base model for a wide range of applications, and that it shows small models can deliver high performance as long as they are properly trained.

StableLM was trained on a new experimental dataset built on The Pile, containing 1.5 trillion tokens. According to Stability AI, this rich training data is why StableLM, despite having only 3 billion to 7 billion parameters, delivers surprisingly high performance on conversational and coding tasks. Stability AI will also open-source a set of instruction-fine-tuned research models, trained on a combination of five recently open-sourced conversational datasets: Alpaca, GPT4All, Dolly, ShareGPT, and HH.

Image source / University of Texas, Google

Photography / Yu Zhihao

AI recent news

1. Meta open-sources Animated Drawings, an AI project that can turn sketches into animations

2. Amazon launched Bedrock, a cloud-based AI model platform, and Titan, a large-scale language model

Source: Organized by iThome, April 2023