
“Is this image real or fake?” We show it a photo, created with artificial intelligence, of a fake attack on the Pentagon. It is a well-known piece of fake news. But the chatbot shouldn’t be able to know that, because it doesn’t read the newspapers. This is Llava, the new open-source “multimodal” chatbot.

And yet the answer surprises us.

“This image is a fake photo of a large cloud of smoke, possibly created for artistic or entertainment purposes.”

“How do you know it’s fake?” we ask it.

“There are some clues that suggest the image is fake. First, the smoke cloud appears to be too large and unrealistic in size, which is not typical of natural events such as wildfires or volcanic eruptions. Secondly, the smoke cloud is described as being in front of a building, but the details of the building are not clearly visible, which could indicate that it is a digitally manipulated background. Finally, the overall appearance of the smoke cloud and the surrounding environment seems artificial, which further supports the hypothesis that it is a fake photo.”

Perfect: useful and even informative.

What Llava is and where to find it

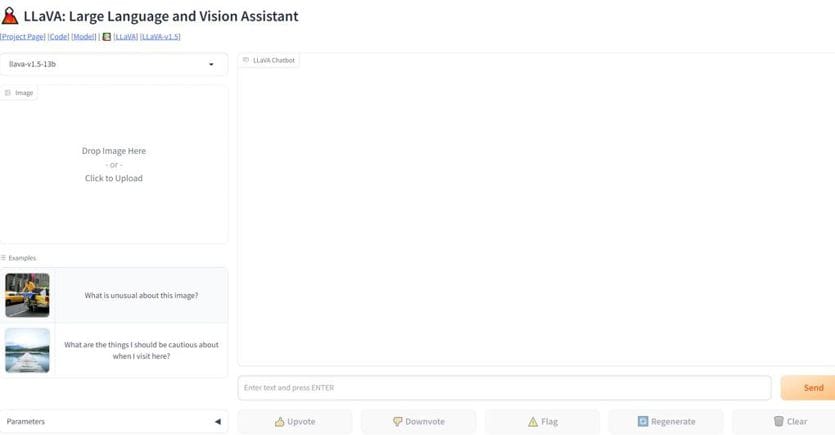

Llava stands for Large Language and Vision Assistant, a large multimodal model trained by a group of researchers. It connects a vision encoder to a large language model (LLM) for general-purpose visual and language understanding. You can try it for free here.
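The article only says that Llava “connects” a vision encoder to an LLM. As a rough illustration of what such a connection can look like, here is a minimal toy sketch in Python. It rests on our own assumption, not on anything stated above: the encoder produces one feature vector per image patch, a linear projection maps each vector into the language model’s embedding space, and the resulting “visual tokens” are placed before the text tokens. The function names, sizes and numbers are all hypothetical; a real model uses much larger vectors and learned weights.

```python
# Toy sketch (assumption): how a multimodal model in the style of Llava might
# "connect" a vision encoder to a language model. All names, sizes and values
# are hypothetical illustrations, not Llava's real ones.
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # rows = output (LLM) dimension, cols = input (vision) dimension


def project(features: Vector, weights: Matrix) -> Vector:
    """Linear projection: map one visual feature vector into the LLM embedding space."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


def build_input_sequence(image_features: List[Vector],
                         text_embeddings: List[Vector],
                         weights: Matrix) -> List[Vector]:
    """Turn each image-patch feature into a 'visual token' and put the visual
    tokens before the text tokens, forming one sequence for the language model."""
    visual_tokens = [project(f, weights) for f in image_features]
    return visual_tokens + text_embeddings


# Two image patches with 3-dimensional features (from a hypothetical vision encoder).
patches = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0]]
# Hypothetical 2 x 3 projection matrix into a 2-dimensional "language" space.
W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 1.0]]
# Embedding of a single text token of the user's question (hypothetical).
question = [[0.5, 0.5]]

sequence = build_input_sequence(patches, question, W)
print(sequence)
```

Running it prints `[[1.0, 2.0], [0.0, 2.0], [0.5, 0.5]]`: two visual tokens followed by the one text token, i.e. the single sequence the language model would then read in order to answer a question about the image.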

What Llava can do

We give Llava an image and ask it questions about it (for now, in English only). In our tests it always answered within a few seconds. It can do three things: recognize objects or describe the content of an image; perform OCR, i.e. read the words that appear in the image; and analyze the image, drawing on its knowledge base to offer advice and considerations, like the one about fake news. Note that the system is interactive, just like ChatGPT and similar tools: we can go back and forth, continuing the conversation and asking for other related things.

Having it read and translate a text is perhaps not very useful: Samsung’s Bixby and Google Lens already do this on smartphones, more directly and conveniently. Image analysis is much more interesting. A typical example is to show it a photo of an open fridge and ask something like: “What recipes would you recommend with these ingredients, for a dinner with three people, one of whom is vegetarian?” Its answer in our test: “Here’s a suggestion for the dinner menu: 1. Vegetarian Pasta Salad: – Cook the pasta (spaghetti, penne or any other type) and set aside. – In a separate bowl, mix the cherry tomatoes, sliced peppers and sliced cucumbers. – In a third bowl, mix the cooked pasta, vegetables and a dressing made of olive oil, balsamic vinegar, salt and pepper. – Optionally add some grated parmesan to flavor everything. 2. Grilled chicken or vegetable skewers…”. Two more suggestions follow, and the answer ends with: “This dish offers a variety of flavors and textures that satisfy different tastes and dietary preferences. The vegetarian pasta salad is a light and refreshing option, while the”. The sentence breaks off abruptly, a typical glitch of these language models.

We can also show it a garment care label, or something similar, and ask what the symbols mean or, more directly, whether the garment can be ironed or bleached according to those symbols. Here Llava didn’t fare so well. “Can I bleach this shirt, given this label?” “The presence of ironing instructions on the label indicates that the fabric is suitable for ironing (what does that have to do with anything? Ed.). However, it is essential to follow the instructions on the label to ensure that the fabric is ironed correctly and to maintain its quality and appearance. Regarding bleaching, it is not possible to determine whether the fabric can be bleached based on the information provided.” And so on: generic information. In fact, the label did carry a “do not bleach” symbol. We tried the same image on Bing Chat, which is now also multimodal, and got a better answer: “Based on the symbols on the label, it appears that the shirt should not be bleached. The bleaching symbol is a triangle and the presence of a line through it indicates that bleaching is not recommended.” Good; however, it took a few dozen seconds and crashed a couple of times. Llava is certainly quicker; perhaps its (much) smaller number of users plays a part.

Last, a “medical” test. We opened Llava on our phone and, directly through its interface, took a photo of a nasal spray we kept on our desk. We asked what it was, how to use it and what the dosage was. Answer: “The image shows a bottle of Care Nasal Spray, a nasal decongestant. The use of this nasal spray is to relieve nasal congestion and provide temporary relief from cold and flu symptoms. The dosage of Care nasal spray is to spray it into the nostrils as needed, usually two or three times a day. It is important to follow the instructions on the package and consult a healthcare professional for any specific advice on dosage or use.”

Overall opinion

Many experts note that multimodal bots may well be the future of these systems. In the last year alone, Bing, Bard and ChatGPT with GPT-4V have arrived alongside Llava. Each has its strengths and weaknesses. Overall, they are still immature but very interesting products: they can surprise with their usefulness and effectiveness, or suddenly disappoint. We are probably still at the beginning of a technology that will find widespread application in analyzing multimedia data, for personal or business use.