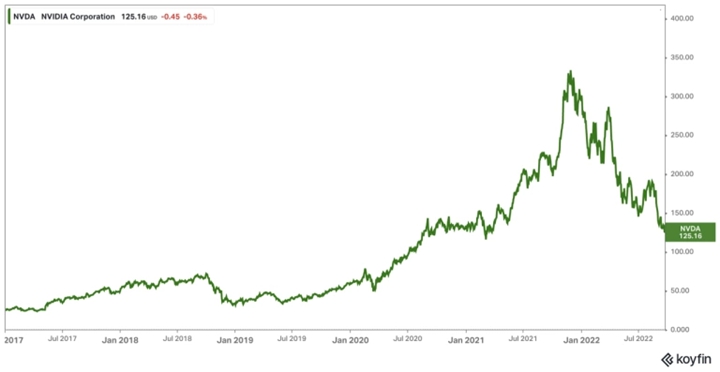

NVIDIA’s investors have been in the valley before:

However, this chart does not show NVIDIA over the last two years; it shows the stock price from early 2017 to early 2019. Here is the stock price from 2017 to today:

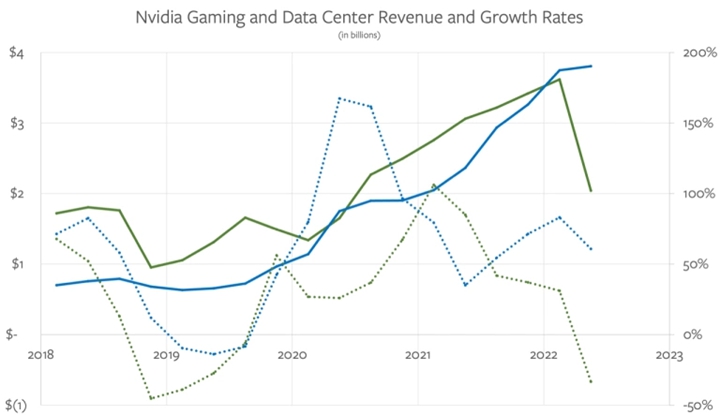

Three big things have happened in NVIDIA’s business over the past three years that have pushed their stock price to unprecedented heights:

- The pandemic led to a surge in purchases of personal computers, especially gaming graphics cards: customers needed new computers and, with nowhere else to spend their discretionary income, put it toward a better gaming experience.

- Machine learning applications, which are trained on NVIDIA GPUs, are exploding at the hyperscalers.

- The cryptocurrency bubble caused a surge in demand for NVIDIA chips, as making money (mining) depended on solving Ethereum's proof-of-work (PoW) puzzles.

The cryptocurrency trend is more of a cliff than a valley: Ethereum's successful switch to a proof-of-stake (PoS) model made entire mining farms built out of thousands of NVIDIA GPUs worthless overnight; given that the other major proof-of-work cryptocurrency network, Bitcoin, is mined almost entirely with custom-designed chips, all of these old GPUs are flooding the second-hand market. The timing is particularly bad for NVIDIA, as the company's earlier efforts to meet demand for its 3000-series chips are bearing fruit just as the pandemic-induced buying spree has ended. Needless to say, too much new inventory combined with too much used inventory is making the company's financial performance abysmal, especially since NVIDIA is still trying to clear the way for its new series.

In an interview last week, NVIDIA CEO Jensen Huang admitted that the company hadn’t foreseen this:

I don’t think we could have foreseen this. I don’t think I’d do anything different, but what I’ve learned from previous examples is that once it ends up happening to you, it’s all about swallowing the bitter fruit and letting it go… We went through two bad quarters; in the context of a company, two bad quarters really frustrate all investors and make things tough for all employees.

NVIDIA has encountered this situation before.

Just deal with the problem, don’t get too emotional, understand how the problem came about, and keep the company as agile as possible. But once it is a fait accompli, you can only make objective, hard decisions. We took care of our partners, we took care of our channel, and we made sure everyone had enough time. We delayed the release of Ada to ensure that all parties had enough time to reprice their products, so that even once Ada came out, the repriced products were actually a very good value. I think we’ve taken care of all parties as best we can, which has resulted in two pretty bad quarters. But I think in the big picture, we’ll be back soon, so I think maybe that’s a lesson from the past.

That may be a stretch; earlier this year, analysts such as Tae Kim and Doug O’Laughlin predicted a slump in NVIDIA’s stock price, though by then it was probably too late to avoid the perfect storm of slowing PC sales and Ethereum’s transition away from proof-of-work, given that NVIDIA had already ordered an additional batch of 3000-series GPUs in the middle of the pandemic (Huang also pointed to longer production lead times for chips as a big reason why NVIDIA got it so wrong).

More worryingly for NVIDIA, while the inventory and Ethereum issues were the biggest drivers of those “pretty bad quarters,” they are not the only valley its gaming business is facing. I think of John Bunyan’s Pilgrim’s Progress:

But in the Valley of Humiliation, the poor believer had had enough; for he had not gone far when he saw a vicious enemy called Apollyon approaching in the field.

This devil is called the inventory problem; in the story, the believer defeated the devil, and NVIDIA will eventually overcome the inventory problem as well.

After walking through this valley, there is another valley called the Valley of the Shadow of Death; believers must pass through here, because the road to the kingdom of heaven passes through it. This valley is a very cold place. The prophet Jeremiah described it this way: “A wilderness, a desert with deep pits, a land of drought and the shadow of death, a land that no one (except the believers) passes through and no one lives in.”

What was astounding about NVIDIA’s keynote at GTC last week was how well the parable fits NVIDIA’s ambitions: the company is on a seemingly rather lonely journey to define the future of gaming, and it’s unclear whether or when the rest of the industry will follow. In addition, the company is pursuing an equally bold strategy in the data center and the metaverse: in all three directions, NVIDIA is pursuing heights greater than those it has reached in the past two years, but the path is surprisingly uncertain.

Games in the Valley: Ray Tracing and Artificial Intelligence

For a long time, rendering 3D games has relied on a series of tricks, especially lighting tricks. First, the game determines what you can actually see (there is no point in drawing an object that is occluded by other objects); then it applies the appropriate texture to each object; next, it lights the object from a predetermined light source location and adds shadows. Finally, the entire scene is converted into individual pixels and drawn on the 2D screen; this process is called rasterization.

Ray tracing treats light completely differently: instead of lighting from a predetermined light source and applying shadow maps, ray tracing starts from your eye (or more precisely, the camera through which you view the scene). It traces a ray through every pixel of the screen; depending on the type of object the ray hits, the ray is reflected or refracted and traced onward until it either reaches a light source (in which case the pixel is lit) or is discarded. This produces very realistic lighting effects, especially reflections and shadows. Take a look at this comparison from PC Magazine:

Let’s take a look at how ray tracing can improve the visuals of games. I took a few screenshots of Square Enix’s PC version of Shadow of the Tomb Raider, which supports ray-traced shadows on Nvidia GeForce RTX graphics cards. Take a closer look at the shadows on the ground.

[…]Ray-traced shadows are softer and more realistic than the rougher rasterized version. Their darkness depends on how much light the object blocks, and even within a single shadow there are lighter and darker areas, whereas rasterization seems to give each object a very hard edge. The rasterized shadows look fine, but after playing games with ray-traced shadows, it’s hard to go back.
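To make the eye-ray idea described above concrete, here is a minimal Python sketch. It is not how any GPU or game engine implements ray tracing; the scene (one sphere, a ground plane, a point light), the constants, and every function name are assumptions made up for illustration. A ray is cast from the camera, and if it lands on the ground, a second ray toward the light decides whether that point is in shadow.

```python
import math

# Hypothetical scene, chosen only for illustration.
CAMERA = (0.0, 1.0, -5.0)
LIGHT = (0.0, 10.0, 0.0)
SPHERE_CENTER = (0.0, 1.0, 0.0)
SPHERE_RADIUS = 1.0
EPSILON = 1e-6  # ignore hits at (or behind) the ray origin


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v):
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def hit_sphere(origin, direction):
    """Distance along a unit-length ray to the sphere, or None if it misses."""
    oc = sub(origin, SPHERE_CENTER)
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - SPHERE_RADIUS ** 2
    disc = b * b - 4.0 * c  # quadratic discriminant (a == 1 for a unit ray)
    if disc < 0:
        return None
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > EPSILON else None


def hit_ground(origin, direction):
    """Distance along the ray to the plane y = 0, or None if it misses."""
    if abs(direction[1]) < 1e-9:
        return None
    t = -origin[1] / direction[1]
    return t if t > EPSILON else None


def trace(direction):
    """Follow one camera ray and report what it sees."""
    direction = normalize(direction)
    t_sphere = hit_sphere(CAMERA, direction)
    t_ground = hit_ground(CAMERA, direction)
    if t_sphere is not None and (t_ground is None or t_sphere < t_ground):
        return "sphere"
    if t_ground is None:
        return "sky"
    point = tuple(CAMERA[i] + t_ground * direction[i] for i in range(3))
    # Shadow ray: is anything between this ground point and the light?
    to_light = normalize(sub(LIGHT, point))
    return "shadow" if hit_sphere(point, to_light) is not None else "lit"


if __name__ == "__main__":
    for d in [(0, 0, 1), (1, -1, 5), (3, -1, 5), (0, 1, 0)]:
        print(d, trace(d))
```

A single shadow ray toward a point light gives hard-edged shadows; the softer shadows described in the quote require many rays per pixel, which is part of why the technique is so expensive to run in real time.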

NVIDIA first announced API support for ray tracing in 2009; however, very few games used the technique because of its high computational cost (movie CGI does use ray tracing, but rendering there can take hours or even days, whereas a game has to compute everything in real time). So in 2018, NVIDIA launched the GeForce 2000 series graphics cards, which introduced dedicated ray tracing hardware (hence the name “RTX”). AMD went the other way, adding ray tracing to its core shader units (which also handle rasterization); AMD’s approach is slower than NVIDIA’s dedicated-hardware solution, but it works, and importantly, since AMD makes the graphics chips for both the PS5 and Xbox, the entire industry now supports ray tracing. More and more games will support ray tracing in the future, but most applications are still fairly limited due to performance concerns.

An important point about ray tracing, however, is that lighting effects are computed dynamically rather than relying on light and shadow maps, so developers get lighting effects “for free”. A game or 3D environment that relies entirely on ray tracing should be easier and cheaper to develop; more importantly, it means that the environment can change in dynamic ways that the developer never anticipated, with lighting that is more realistic than even the most laboriously hand-crafted environments.

This is especially intriguing for two emerging use cases. The first is Minecraft-like simulation games. With ray tracing technology, the dream of highly detailed 3D worlds that are dynamically constructed and perfectly lit is getting closer and closer to reality. Future games can go even further: NVIDIA’s keynote began with a game called RacerX, where every part of the game, including objects, is fully simulated; the in-game physics also take advantage of the same type of calculation used for lighting.

The second use case is the future of AI-generated content that I discussed in DALL-E, the Metaverse, and Zero Marginal Cost Content. All of the textures I mentioned above are currently drawn by hand; as graphics capabilities (mostly driven by NVIDIA) increase, so do the development costs of new games, because of the need to create high-resolution assets. It is conceivable that in the future assets will be created completely automatically, in real time, and then properly lit through ray tracing.

However, NVIDIA is already using AI to compute images: the company has also released version 3 of its Deep Learning Super Sampling (DLSS) technology. This technology predicts and pre-renders entire frames, which means those frames do not need to be computed at all (previous versions of DLSS predicted and pre-rendered individual pixels). In addition, just as with ray tracing, NVIDIA uses dedicated hardware to make DLSS perform better. These new techniques, paired with the dedicated cores on NVIDIA GPUs, make NVIDIA ideally suited to establish a new paradigm for gaming and for immersive 3D experiences such as virtual worlds.

But here’s the thing: all this specialized hardware comes at a price. NVIDIA’s new GPUs are big chips: the top-of-the-line AD102, sold as the RTX 4090, is a fully integrated system-on-a-chip built on TSMC’s N4 process and measuring 608.4 mm²; by comparison, AMD’s upcoming top-of-the-line Navi 31 chip, at the heart of the RDNA 3 graphics card series, is a chiplet design, with a 308 mm² compute die on TSMC’s N5 process plus six 37.5 mm² memory dies on TSMC’s N6 process. In short, NVIDIA’s die is much larger (which means more expensive), and it uses a slightly more modern process (which probably costs more). Dylan Patel explained the potential impact of this at SemiAnalysis:

In short, AMD saves significantly on die costs by forgoing AI and ray tracing fixed-function acceleration and moving to smaller dies with advanced packaging. Advanced packaging costs for AMD’s RDNA 3 N31 and N32 GPUs have risen significantly, but small fan-out RDL packages are still very cheap relative to wafer and yield costs. At the end of the day, AMD’s increase in packaging cost is small compared to the savings from splitting off the memory controllers and Infinity Cache, using the cheaper N6 instead of N5, and higher yields…for the first time in nearly a decade, NVIDIA has a worse cost structure for traditional rasterized gaming performance.
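As a rough illustration of why die size matters, the sketch below plugs the die areas quoted above into a common textbook approximation for dies per wafer. The 300 mm wafer diameter, the approximation formula, and all names are assumptions for illustration; it ignores yield, scribe lines, and packaging, so it is not a cost model and is not taken from SemiAnalysis.

```python
import math

# Die areas quoted in the text, in square millimeters.
AD102_MM2 = 608.4          # NVIDIA's monolithic die
NAVI31_COMPUTE_MM2 = 308.0  # AMD's compute die
NAVI31_MEMORY_MM2 = 37.5    # each AMD memory die
NAVI31_MEMORY_COUNT = 6


def dies_per_wafer(die_area_mm2, wafer_diameter_mm=300.0):
    """Approximate whole dies per wafer (assumed formula, no yield loss).

    First term: wafer area divided by die area.
    Second term: a correction for partial dies lost at the round edge.
    """
    wafer_area = math.pi * (wafer_diameter_mm / 2.0) ** 2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2.0 * die_area_mm2)
    return int(wafer_area / die_area_mm2 - edge_loss)


# Total silicon in one Navi 31 package: 308 + 6 * 37.5
navi31_total_mm2 = NAVI31_COMPUTE_MM2 + NAVI31_MEMORY_COUNT * NAVI31_MEMORY_MM2

if __name__ == "__main__":
    print("Navi 31 total silicon (mm^2):", navi31_total_mm2)
    print("AD102 dies per wafer:", dies_per_wafer(AD102_MM2))
    print("Navi 31 compute dies per wafer:", dies_per_wafer(NAVI31_COMPUTE_MM2))
    print("Navi 31 memory dies per wafer:", dies_per_wafer(NAVI31_MEMORY_MM2))
```

Under these assumptions the smaller compute die fits more than twice as many times on a wafer as the monolithic die, and smaller dies also tend to yield better, which is the effect the quote attributes to AMD's design.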

This is the valley NVIDIA is walking into. Gamers immediately pushed back after NVIDIA’s keynote because of the high prices of the 4000 series, especially once the details on NVIDIA’s website indicated that one of the second-tier chips NVIDIA announced was actually closer to a third-tier chip; they suspected that NVIDIA was playing a marketing game to cover up a price hike. NVIDIA’s graphics cards are perhaps the best performing, and undoubtedly the best for a future of ray tracing and AI-generated content, but at the cost of not providing the best value for today’s games. Reaching the heights of purely simulated virtual worlds means passing through a generation of charging for features that most gamers don’t care about yet.

AI in the Valley: Systems, Not Chips

One of the reasons for optimism about NVIDIA’s approach to gaming is that the company made a similar bet on the future when it invented shaders. I once explained shaders after last year’s GTC:

NVIDIA first rose to fame with its Riva and TNT series of graphics cards (hardcoded to accelerate 3D libraries like Microsoft’s Direct3D):

However, the GeForce series can be fully programmed through a computer program called a “shader”. This means that even after being manufactured, GeForce cards can be improved by developing new shaders (for example to support newer versions of Direct3D).

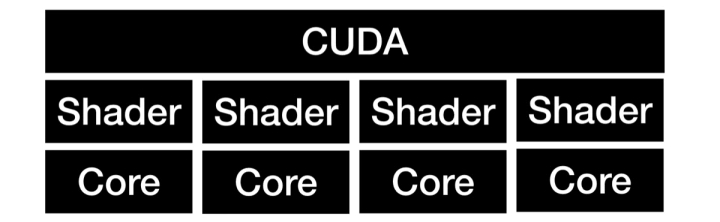

[…]More importantly, shaders don’t have to compute graphics; any kind of software (ideally any simple computation that can run in parallel) can be programmed as a shader; the trick is figuring out how to write them, and this is where CUDA comes in. In my 2020 article on NVIDIA’s dream of integration, I explained:

This increased level of abstraction means the underlying graphics processing units can be simpler, which means a graphics chip can have many more of them. For example, the most advanced version of NVIDIA’s just-released GeForce RTX 30 series has an incredible 10,496 cores.

This level of scalability makes sense for graphics cards because graphics processing is completely parallel: a screen can be divided into any number of sections, each of which can operate independently at the same time. This means that performance can be scaled horizontally, that is, each additional core increases performance. However, it turns out that the types of operations that can be fully parallelized are not limited to graphics…

So NVIDIA has changed from a modular component manufacturer to an integrated maker of hardware and software. The modular part refers to its graphics cards, and the hardware-software integration refers to its CUDA (Compute Unified Device Architecture) platform. The CUDA platform allows programmers to access the parallel processing power of NVIDIA graphics cards from multiple languages without having to know how to program graphics.
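The claim that a screen can be divided into independent sections can be sketched in a few lines of Python (deliberately not CUDA). Everything here is hypothetical: `shade_pixel` is a stand-in for real shading work, and the image size and band-splitting scheme are arbitrary assumptions. The point is only that each pixel depends on nothing but its own coordinates, so any split of the screen produces the same image.

```python
import math
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 8, 6  # tiny hypothetical "screen"


def shade_pixel(x, y):
    """Stand-in shader: the result depends only on this pixel's coordinates."""
    return (x * 31 + y * 17) % 256


def render_rows(rows):
    """Render one horizontal band of the screen."""
    return [[shade_pixel(x, y) for x in range(WIDTH)] for y in rows]


def render_serial():
    """Render the whole screen in one piece."""
    return render_rows(range(HEIGHT))


def render_tiled(num_workers):
    """Split the screen into bands and render each band independently."""
    band = math.ceil(HEIGHT / num_workers)
    bands = [range(start, min(start + band, HEIGHT))
             for start in range(0, HEIGHT, band)]
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # map() returns results in the same order as the input bands
        parts = list(pool.map(render_rows, bands))
    return [row for part in parts for row in part]


if __name__ == "__main__":
    print(render_tiled(3) == render_serial())
```

Python threads are used here only to show independence of the work, not to demonstrate a speed-up; on a GPU the same property is what lets thousands of cores each take their own slice of the screen.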

There are now three levels of Nvidia’s “technology stack”:

Much of this is out of desperation. In an interview with us last spring, Jensen Huang explained that the introduction of shaders, which he sees as critical to the company’s future, nearly killed the company:

The disadvantage of programmability is that it is less efficient. As I mentioned before, fixed-function hardware is more efficient. Anything that is programmable, anything that can do more than one thing, by definition carries an unnecessary burden when it comes to accomplishing any particular task, so the question is “when should we do this (make things programmable)?” One of the things that inspired us at the time was that everything looked like an OpenGL flight simulator. Everything was blurry textures and trilinear interpolation to refine textures; nothing had any life. We felt that if you don’t bring life to the medium, if you don’t let artists create different games, different genres, and tell different stories, the medium will eventually cease to exist. We wanted to make a more programmable palette that games and artists could do awesome things with, and we were driven by that ambition. There was also another motive urging us on, which was that we did not want to go out of business when graphics cards were commoditized. So when those considerations got to a certain point, we started doing programmable shaders, so I think the motivation for that was pretty clear. But then we were punished in ways we didn’t expect.

What kind of punishment?

The penalty came suddenly: everything we expected from programmability, all the overhead we took on for the future, all those unnecessary features, became a penalty, because current applications didn’t reap any benefit from them. Unless new applications come out, your chip just looks too expensive, and the market is very competitive.

NVIDIA has survived because their direct acceleration is still the best. In the long run, NVIDIA will still thrive because they have developed an entire CUDA infrastructure to take advantage of shaders. This is where data center growth comes from; Jensen Huang explains:

From the day you become a processor company, you have to internalize this: this processor architecture is brand new. There has never been a programmable pixel shader like this, or a programmable GPU processor like this, or a programming model like this, so we had to internalize this. You have to internalize that this is a new programming model, and you have to do everything that comes with being a processor company or a computing platform company. So we had to build a compiler team, we had to think about making SDKs, we had to think about building our own libraries, and we had to engage with developers, evangelize our architecture, and help people realize the benefits of it; and if that doesn’t work, you even have to develop new libraries yourself so that people can easily port their applications to them and see the benefits.

The first reason to repeat this story is to point out the similarities between the cost of shader complexity and the cost of ray tracing and artificial intelligence in current games; the second is to note that NVIDIA’s approach to problems has always been to do everything itself. Back then, it meant developing CUDA to program those shaders; today, it means developing entire systems for artificial intelligence.

In his keynote speech last week, Jensen Huang said:

NVIDIA is committed to advancing science and industry through accelerated computing. The days of getting better performance without speeding up are over. Using software without acceleration support can only make up for its lack of performance and scalability at a high cost. NVIDIA has been focusing on this field for nearly 30 years. As an expert in accelerating software and expanding computing, NVIDIA has provided a million-fold acceleration, far exceeding Moore’s Law.

Accelerated computing is a full-stack challenge. It requires a deep understanding of the problem domain, with optimizations at every level of computing and across all three chips: CPU, GPU, and DPU. Scaling across multiple GPUs and multiple nodes is a data center-scale challenge that involves networking and storage as well as computing. And developers and customers want to be able to run their software in many places, from PCs to supercomputing centers and enterprise data centers, from the cloud to the edge. Different applications want to run in different locations and in different ways.

Today, we’re going to talk about accelerated computing across the full stack. We’ll introduce new chips and explain how they can unlock more performance with limited transistors; new libraries, and how they accelerate critical tasks in science and industry; new domain-specific frameworks, which facilitate the development of performant and easy-to-deploy software; and new platforms that allow you to deploy software safely, with confidence, and gain orders-of-magnitude improvements.

In Huang’s view, fast chips alone are not enough for future workloads: that’s why NVIDIA is building entire data centers with all of its own equipment. But again, a future where every company needs accelerated computing delivered by NVIDIA’s purpose-built data centers (NVIDIA’s Celestial City) is a stark contrast to the status quo, in which the biggest users of NVIDIA chips in the data center are hyperscalers that have their own systems in place.

For example, companies like Meta don’t need NVIDIA’s networking; they invented their own. These companies do need massively parallel chips to train their machine learning algorithms, which means they have to pay NVIDIA its high margins. No wonder Meta, like Google before it, is developing its own chips.

This is a path that all large companies are likely to follow: they don’t need NVIDIA systems, they need chips that can meet their requirements and run in their own systems. That’s why NVIDIA is so committed to democratizing AI and accelerated computing: in the long term, the key to scaling is building systems for everyone but the biggest players. The trick to crossing the valley is seeing the ecosystem grow before NVIDIA’s current big customers stop buying NVIDIA’s expensive chips. Jensen Huang once foresaw that 3D accelerators would be commoditized, so he made the leap to shaders; you can sense that he has the same fear about chips, so he is now making the leap to systems.

Metaverse in the Valley: Omniverse Nucleus

In an interview last spring, I asked Jensen Huang if NVIDIA would build its own cloud services:

If we were to do a service, in addition to what we run ourselves (if we had to run it ourselves), the service would run on GPUs all over the world, in every cloud. One of the rules our company has made is not to waste company resources doing what already exists. If something already exists, say something like an x86 CPU, we just use it. Or if something already exists, we choose to partner with it, because we don’t want to waste our scarce resources on it. So, if something already exists in the cloud, we’ll definitely just use that thing, or let them do it, and that’s better. But if there is something that makes sense for us but doesn’t make sense for them, we’ll still ask them to do it; if nobody else wants to, then we might decide to do it ourselves. We are very selective about what we do, and we are very firm about not doing what other people have already done.

As it turns out, there’s one thing no one else wants to do, and that’s to build a common library for 3D objects, which NVIDIA calls the Omniverse. These objects can be super-detailed, millimeter-precision objects used in manufacturing or supply chains, or fantasy objects and buildings generated for virtual worlds; according to Jensen Huang, these objects will be available to anyone developing on Omniverse Nucleus.

The Celestial City here is a world of 3D experiences spanning industry and entertainment (an Omniverse of metaverses, if you will), all connected to NVIDIA’s cloud services; it is ambitious enough to make Zuckerberg blush! The valley seems longer and darker for the same reason: not only do you need to create all of these assets and 3D experiences, but you also need to convince the entire market of their usefulness and necessity. Building a cloud service for a world that doesn’t exist yet means reaching for heights that are still out of sight.

Jensen Huang’s and NVIDIA’s ambition is beyond question, although some may question the wisdom of traversing three valleys at once; even setting aside that perfect storm in gaming, it is perhaps reasonable that the stock itself remains in a valley too.

It’s worth considering, though, that the number one reason NVIDIA customers (whether consumer or enterprise) are frustrated with the company is price: NVIDIA’s GPUs are expensive, and the company’s margins (except in recent quarters) are very high. However, in NVIDIA’s case, its pricing power comes directly from NVIDIA’s own innovations, both in terms of absolute performance for given workloads, and in its investment in the CUDA ecosystem, which created the tools for entirely new workloads.

In other words, NVIDIA has earned the right to be hated by having taken, in the past, exactly the kind of risk it is taking now. As an example, let’s say the future expectation for all games is not just ray tracing, but full simulation of all particles: NVIDIA’s investment in hardware will mean it dominates that era as much as it did the rasterization era. Likewise, if AI applications are democratized and available to all enterprises, not just hyperscalers, then it is NVIDIA that will be positioned to capture the entire long tail. Furthermore, if we enter the world of the metaverse, NVIDIA has a lead not only in infrastructure, but also in the library of essential objects necessary to make that world a reality (objects which, of course, will be lit with ray tracing in AI-generated spaces), making NVIDIA the most important infrastructure provider in the field.

Not all of these bets will pay off; however, I do appreciate the boldness of the vision, and I won’t begrudge NVIDIA a decent profit in the future should it make it through the valley to the Celestial City.