In recent years, AI technology has developed rapidly. As deep learning frameworks such as PyTorch and TensorFlow have matured, the process of training AI models has become relatively clear and well established. However, the arrival of pre-trained models, and later of a series of very large models, means that most AI developers and enterprises have less and less need to train and customize models by hand. As public expectations for AI models return to rationality, competition among enterprises in the AI field has gradually shifted from “academic competition” to “application competition”.

This year, the shift has been especially visible. The maturing of “text-to-image” models, represented by diffusion models, has set off a wave of AI painting and made the concept of AIGC (AI-generated content) popular. Toward the end of the year, ChatGPT sparked heated discussion about human-machine dialogue, and even about whether it could upend the search engine as we know it. AI is no longer a contest of academic concepts. It has begun to become a real application and tool that increases productivity.

AI paintings are even good enough to win competition awards.

As shown in the picture, “Théâtre D’opéra Spatial” (Space Opera Theater) was produced by Jason Allen with the AI image generator Midjourney after nearly a thousand attempts. It won first prize in a digital art competition at the Colorado State Fair.

However, applying AI technology efficiently at the application development level still faces many challenges.

In enterprise AI applications, teams inevitably have to work out how to put models into production, how to keep them available and reliable after deployment, and how to monitor and maintain their performance. Meeting these challenges requires a complete AI application development process, equipped with the corresponding tools and resources.

Only a handful of giants have such a complete tool chain. Even there, the tool chain is often immature, and employees frequently complain about it.

These problems usually show up when a trained model is being deployed to production. The tool chain for model deployment is long, and compatibility problems between upstream and downstream tools keep cropping up. On top of that, enterprises rely heavily on open source tools, which can easily introduce security problems. Taken together, these scattered problems greatly restrict enterprises’ productivity in developing AI applications.

Here is a specific example.

When preparing a model for deployment, we usually export the training checkpoint to an ONNX model for forward inference. That ONNX model is then optimized with TensorRT, an inference acceleration framework. However, because AI tasks vary so widely, we often have to change the model structure. Once uncommon operators (ops) are involved, TensorRT can easily turn out not to support them. We then have to write a plugin and make a series of changes and adaptations, which greatly slows down deployment and launch.
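
The failure mode can be made concrete with a small check for ops the engine does not support. This is a minimal sketch built on a few assumptions. The model graph is represented as a plain list of (node name, op type) pairs. `SUPPORTED_OPS` is a hypothetical set chosen for illustration and is not TensorRT's actual operator support matrix. The `DeformConv` node is likewise a hypothetical stand-in for an "uncommon" op. The function flags the nodes that would need a custom plugin before an engine could be built.

```python
from typing import Iterable

# Hypothetical set of op types the target inference engine handles natively.
# Illustrative only: this is NOT TensorRT's real operator support matrix.
SUPPORTED_OPS = {"Conv", "Relu", "Add", "MatMul", "Softmax", "Reshape"}


def find_unsupported(nodes: Iterable[tuple[str, str]],
                     supported: set[str] = SUPPORTED_OPS) -> list[tuple[str, str]]:
    """Return the (node_name, op_type) pairs whose op type is not in `supported`.

    Each returned node would need a custom plugin (or a graph rewrite)
    before the inference engine could be built.
    """
    return [(name, op) for name, op in nodes if op not in supported]


if __name__ == "__main__":
    # A toy exported graph: a standard backbone plus one uncommon custom op.
    graph = [
        ("conv1", "Conv"),
        ("relu1", "Relu"),
        ("deform1", "DeformConv"),  # hypothetical op introduced by a model change
        ("fc", "MatMul"),
        ("prob", "Softmax"),
    ]
    for name, op in find_unsupported(graph):
        print(f"{name}: op '{op}' unsupported -> needs a plugin")
```

In a real pipeline, the node list would come from the exported ONNX file. With the `onnx` package, `[(n.name, n.op_type) for n in onnx.load(path).graph.node]` produces pairs in this same shape.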

Even without operator adaptation problems, the inference phase usually demands low latency and high concurrency. To save deployment resources, enterprises therefore often lower model precision through FP16 or even INT8 quantization. When accuracy is evaluated afterwards, the model often turns out to have lost much of its effectiveness. It may then be necessary to go back, apply additional techniques, and retrain the model.
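
Lower precision can cost accuracy, and a quick way to see why is to round a value to half precision. The standard-library `struct` module can round a Python float to IEEE 754 half precision using format `'e'`. The sketch below is an illustration of per-value rounding error, not a model-level accuracy evaluation. FP16 keeps only a 10-bit mantissa, which is roughly three significant decimal digits. Very small values also fall into the subnormal range, where relative error grows.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a float to IEEE 754 half precision and back (struct format 'e').

    Raises OverflowError for values beyond the FP16 range (about 65504).
    """
    return struct.unpack("<e", struct.pack("<e", x))[0]


def relative_error(x: float) -> float:
    """Relative error introduced by storing x in FP16 (x must be nonzero)."""
    return abs(to_fp16(x) - x) / abs(x)


if __name__ == "__main__":
    # A tiny value (subnormal in FP16) shows the larger relative error there.
    for value in [0.1, 3.14159265, 1234.5678, 1e-5]:
        print(f"{value!r:>12} -> {to_fp16(value)!r:<22} "
              f"rel. error {relative_error(value):.2e}")
```

Each individual error is small. Across millions of weights and activations, however, such errors can accumulate, which is one reason a quantized model sometimes needs to be retrained.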

In addition, since AI models often need to process sensitive data, data privacy and security issues also need to be considered during the development of AI applications. Enterprises need to establish a reasonable data protection mechanism to ensure that sensitive information will not be leaked during model training and use.

In general, enterprises face many challenges in AI application development. The need for a tool chain that helps them build and deploy AI applications more efficiently and securely is becoming increasingly urgent. Such a tool chain includes model deployment tools, model management platforms, model monitoring tools, data privacy protection tools, and more. These tools help companies better manage and control the AI application development process and keep their AI applications available and reliable.

Fortunately, it’s here.

NVIDIA AI Enterprise 3.0

A few days ago, NVIDIA announced AI Enterprise 3.0 (NVAIE 3.0 for short), an AI development platform that could fairly be described as operating-system level.

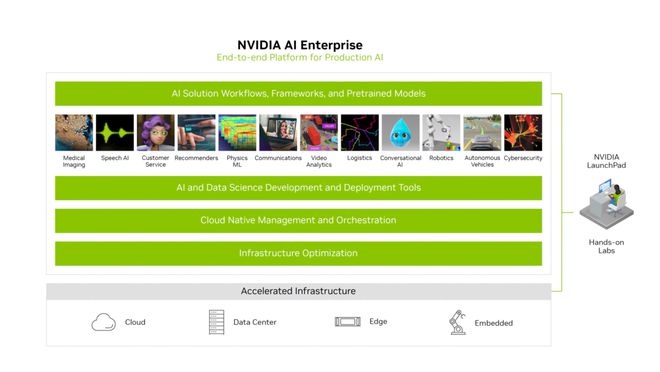

As the figure shows, this is not a traditional deep learning framework like TensorFlow or PyTorch. It is a one-stop platform dedicated to building AI applications quickly. It covers the entire process of AI application development and launch, including model training, inference optimization, deployment, model management, and cloud-native management. Developing an AI application used to take several months. On the NVAIE 3.0 platform, it can sometimes be done in a few hours.

From the figure we can see the key features of the platform at four levels:

- Workflows, frameworks, and pre-trained models (the top layer): at the application-scenario level, inputs and outputs are clearly defined and pre-trained models are preset. This lets teams quickly develop AI applications for typical scenarios.
- Support for model development and deployment: a closed loop of application development tools covering the full life cycle of machine learning models, from development to deployment. It includes the low-code transfer learning tool TAO, the mainstream deep learning frameworks TensorFlow and PyTorch, the TensorRT inference acceleration framework, and inference serving engines.
- Cloud-native architecture supporting hybrid cloud deployment: GPU and DPU integration in Kubernetes (k8s), MLOps tools, and similar components.
- Infrastructure optimization: GPU virtualization, RDMA-based storage access acceleration, low-level CUDA optimization, and more.

Let’s expand the first two layers to see how NVAIE solves the pain points in AI application development.

Take the case mentioned earlier. Once we have trained a good model checkpoint, we need to export it to ONNX and optimize it with TensorRT for deployment, and at this stage we often run into unsupported operators. On the NVAIE 3.0 platform, the TAO components in the second layer make it easy to convert a trained model into a deployable ONNX model. There is no need to worry about missing operators or custom development.

Similarly, the model quantization pain point mentioned earlier is also addressed in the second layer of NVAIE. A quantized INT8 model can be obtained directly through the TAO components, without a cumbersome quantization process and without worrying about losing precision to quantization.
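
For readers who want to see what an INT8 quantization step does internally, here is a minimal sketch of symmetric per-tensor post-training quantization with simple max calibration. It is a generic textbook scheme chosen for illustration, not the procedure TAO or TensorRT uses; TensorRT, for instance, also offers entropy-based calibration. The idea is to pick a scale from representative calibration data, map values to integers in [-127, 127], and map them back. Values outside the calibrated range get clipped, which is one source of precision loss.

```python
def calibrate_scale(calibration_data: list[float]) -> float:
    """Max calibration: map the largest absolute value seen to the INT8 code 127."""
    max_abs = max(abs(v) for v in calibration_data)
    return max_abs / 127.0 if max_abs > 0 else 1.0


def quantize(values: list[float], scale: float) -> list[int]:
    """Symmetric INT8 quantization: round(x / scale), clamped to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize(codes: list[int], scale: float) -> list[float]:
    """Map INT8 codes back to floating point."""
    return [code * scale for code in codes]


if __name__ == "__main__":
    calib = [0.02, -0.75, 1.27, 0.4, -1.1]  # toy "activation" samples
    scale = calibrate_scale(calib)           # 1.27 / 127, roughly 0.01
    x = [0.123, -0.5, 2.0]                   # 2.0 lies outside the calibrated range
    x_hat = dequantize(quantize(x, scale), scale)
    for orig, rec in zip(x, x_hat):
        print(f"{orig:+.3f} -> {rec:+.3f} (error {abs(rec - orig):.3f})")
```

Inside the calibrated range, the round-trip error is at most half the scale. The clipped value 2.0, by contrast, comes back as about 1.27. This is why the choice of calibration data and method matters so much in practice.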

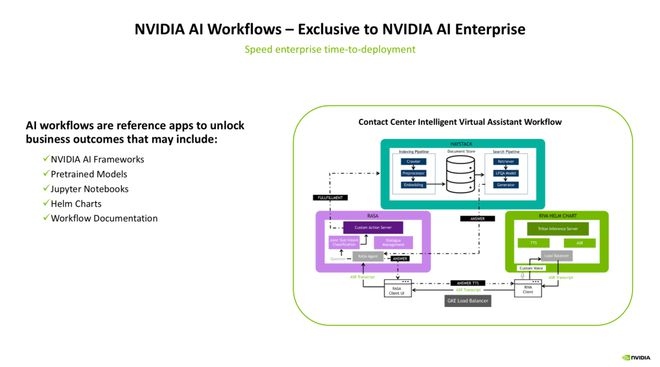

For some typical AI application scenarios, such as smart customer service, application development workflows are preset in the top layer of the platform:

Take the workflow of the smart virtual assistant in the figure above as an example. Let’s see how a typical workflow works.

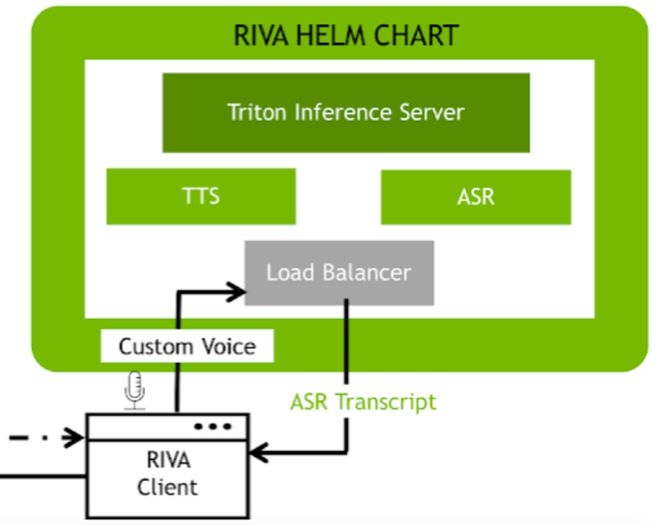

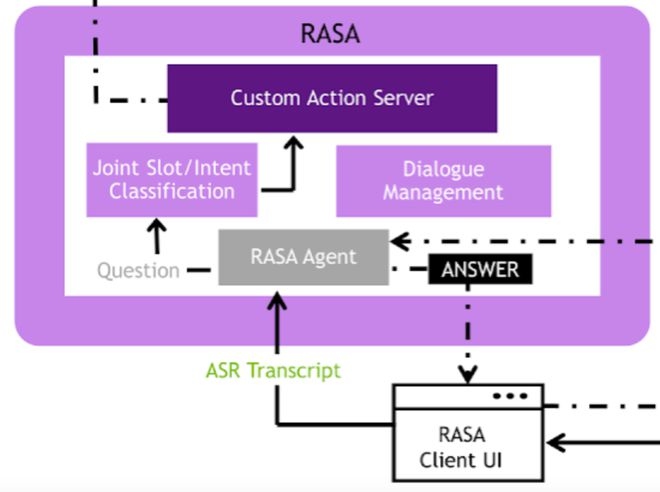

The bottom right corner shows a RIVA-based workflow. In it, speech-to-text (ASR) and text-to-speech (TTS) serve as the “input preprocessing” and “output postprocessing” steps of the smart virtual assistant. The user input obtained through RIVA is then fed into the RASA workflow on the left.

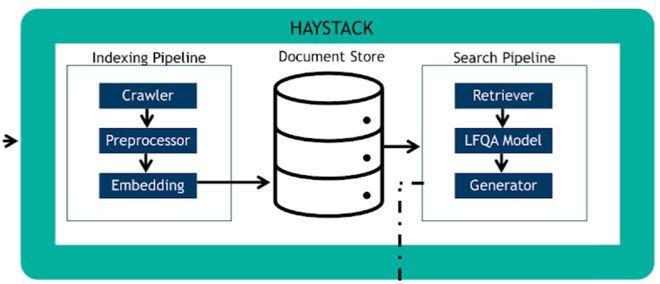

RASA is an open source conversational AI framework. Here, the user’s speech, now converted to text, first passes through the basic NLU module. This module performs tokenization, intent recognition, and slot filling to produce a structured semantic understanding result. That result goes to the internal dialog management (DM) module, which tracks and manages the dialog state. The semantic understanding result is then sent to the Haystack workflow at the top of the figure, which retrieves a candidate reply suited to the user’s question. Finally, the reply is passed back to the RIVA workflow, where the TTS module generates a spoken response.
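
The order of the hand-offs in this data flow can be sketched as a chain of plain functions. Every function below is a hypothetical stand-in and is not the RIVA, RASA, or Haystack API. "Audio" is faked as UTF-8 bytes, NLU is a keyword-based intent and slot extractor, the dialog state is a simple history list, and answer retrieval is a lookup in a small in-memory FAQ. The only point is to show which stage feeds which.

```python
from dataclasses import dataclass, field


@dataclass
class NLUResult:
    intent: str
    slots: dict[str, str]


@dataclass
class DialogState:
    history: list[NLUResult] = field(default_factory=list)


def asr(audio: bytes) -> str:
    """Hypothetical ASR stand-in: the 'audio' here is just UTF-8 text."""
    return audio.decode("utf-8")


def nlu(text: str) -> NLUResult:
    """Toy NLU: tokenize, guess the intent by keyword, fill an 'item' slot."""
    tokens = text.lower().replace("?", "").split()
    intent = "ask_price" if "price" in tokens or "cost" in tokens else "unknown"
    slots: dict[str, str] = {}
    if "of" in tokens and tokens.index("of") + 1 < len(tokens):
        slots["item"] = tokens[tokens.index("of") + 1]
    return NLUResult(intent, slots)


def dialog_manager(state: DialogState, result: NLUResult) -> NLUResult:
    """Track dialog state: reuse the last known 'item' slot if the user omitted it."""
    if "item" not in result.slots:
        for past in reversed(state.history):
            if "item" in past.slots:
                result.slots["item"] = past.slots["item"]
                break
    state.history.append(result)
    return result


# Hypothetical answer store standing in for a retrieval workflow.
FAQ = {("ask_price", "plan"): "The basic plan costs 10 dollars per month."}


def retrieve_answer(result: NLUResult) -> str:
    """Toy answer retrieval: look up (intent, item) in the in-memory FAQ."""
    key = (result.intent, result.slots.get("item", ""))
    return FAQ.get(key, "Sorry, I could not find an answer.")


def tts(text: str) -> bytes:
    """Hypothetical TTS stand-in: returns the reply encoded as bytes."""
    return text.encode("utf-8")


def handle_turn(state: DialogState, audio: bytes) -> bytes:
    text = asr(audio)                          # speech -> text
    result = dialog_manager(state, nlu(text))  # NLU, then dialog management
    return tts(retrieve_answer(result))        # retrieval, then text -> speech


if __name__ == "__main__":
    state = DialogState()
    print(handle_turn(state, b"What is the price of plan?").decode())
    print(handle_turn(state, b"How much does it cost?").decode())
```

The second turn never mentions the plan. The dialog manager fills in that slot from the tracked history, which is the kind of state tracking the DM module is responsible for.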

Together, these steps make up the full operation of a complex intelligent virtual assistant. Building such a system from scratch often takes several months. With the workflows already defined on the NVAIE platform, however, the whole process can be implemented in a matter of hours, which greatly frees up productivity in AI application development.

In addition, the platform includes built-in workflows for audio transcription and for digital fingerprinting in security protection, among others. Workflows for more application scenarios are continuously being designed, and map-based and OCR-based workflows may follow in the future. That is worth looking forward to!

It is worth noting that, to speed up AI application development and improve the final results, the platform also includes a large number of pre-trained models, such as the advanced pedestrian detection model PeopleNet. These pre-trained models are unencrypted and their weights are fully open. Users can therefore use them to “warm up” their own AI models, then fine-tune the weights on labeled, scenario-specific data.
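
The “warm up, then fine-tune” idea can be illustrated without any framework. The sketch below is a generic transfer-learning illustration created for this article and is not TAO’s procedure. It fits a one-parameter linear model y = w·x by gradient descent on a tiny labeled “scene” dataset. It does this twice with the same small step budget: once starting from a hypothetical pretrained weight, and once starting from zero.

```python
def fine_tune(w: float, data: list[tuple[float, float]],
              lr: float = 0.01, steps: int = 20) -> float:
    """Plain gradient descent on mean squared error for the model y = w * x."""
    n = len(data)
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / n  # d(MSE)/dw
        w -= lr * grad
    return w


def mse(w: float, data: list[tuple[float, float]]) -> float:
    """Mean squared error of y = w * x on the given data."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


if __name__ == "__main__":
    # Toy labeled scene data generated with a true weight of 2.2.
    scene_data = [(1.0, 2.2), (2.0, 4.4), (3.0, 6.6)]
    pretrained_w = 2.0  # hypothetical weight learned on a related, generic dataset
    for label, w0 in [("warm start", pretrained_w), ("from scratch", 0.0)]:
        w = fine_tune(w0, scene_data)
        print(f"{label:>12}: w = {w:.3f}, MSE = {mse(w, scene_data):.4f}")
```

Both runs take the same 20 steps. The warm-started run finishes much closer to the scene-specific optimum because it starts near it, which is the benefit that openly available pretrained weights are meant to provide at much larger scale.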

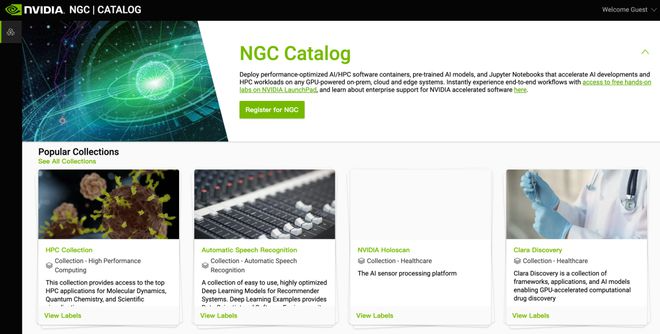

These pre-trained models cover a wide range of application scenarios. For vehicles alone, there are built-in models for vehicle recognition, license plate recognition, vehicle type recognition, and more. Pre-trained models like these can greatly accelerate AI application development. If you are interested, you can browse the available pre-trained models in NVIDIA’s NGC catalog.

Beyond these model-level optimizations, NVAIE 3.0 also optimizes the concurrency, reliability, and GPU utilization of AI services, which are time-consuming and troublesome for enterprise developers. In effect, the NVAIE platform packages development kits at the operating-system level. This gives enterprise users efficiency, effectiveness, and security across the complete life cycle of AI application development.

Moreover, as the world’s leading GPU manufacturer, NVIDIA has hard-core know-how at the GPU level. This lets the NVAIE platform achieve true closed-loop optimization, an important guarantee of efficient application development.

On the cloud-native side, NVAIE 3.0 supports hybrid cloud deployment to meet enterprises’ different deployment needs. It also comes with strong service support, including three years of long-term support, so enterprises can use NVAIE 3.0 with more convenience and peace of mind.

In general, NVIDIA’s NVAIE 3.0 is a very good AI development platform, and its appearance has brought an “efficiency revolution” to the development of AI applications in enterprises. We look forward to NVAIE 3.0 being able to help more companies realize their AI dreams in the future.